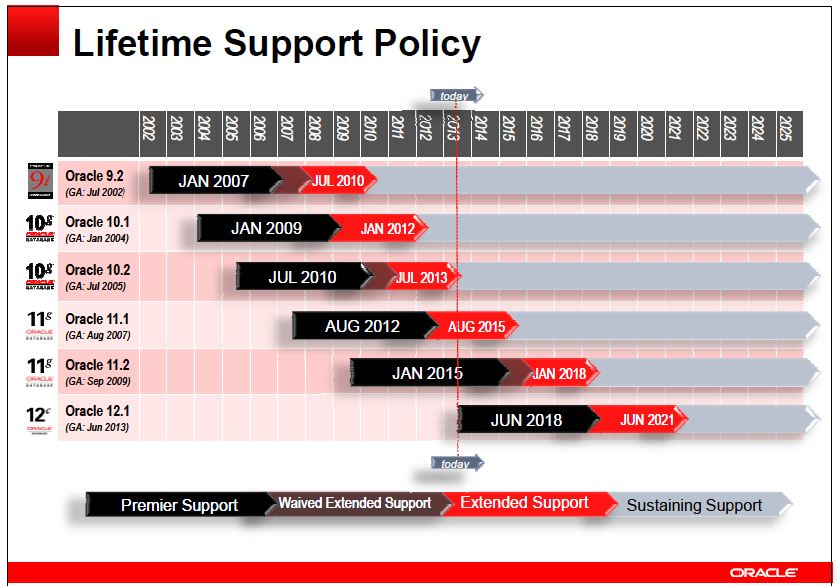

The new Lifetime Support cycle starting with 12c

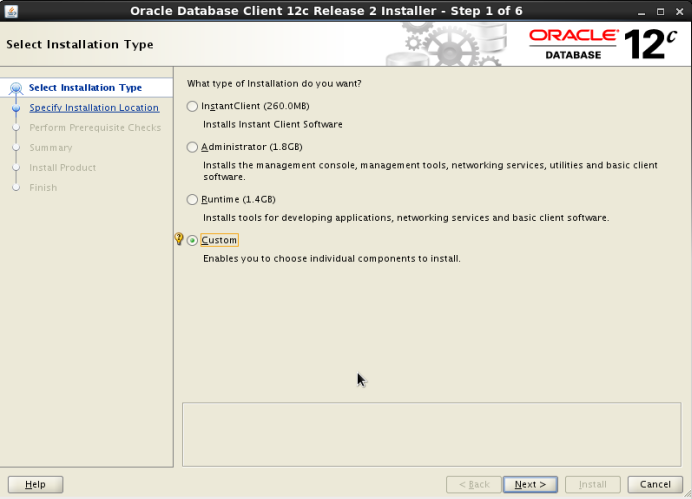

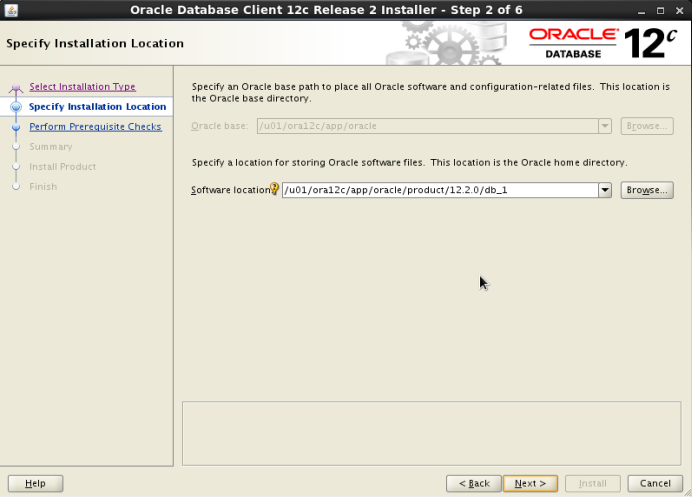

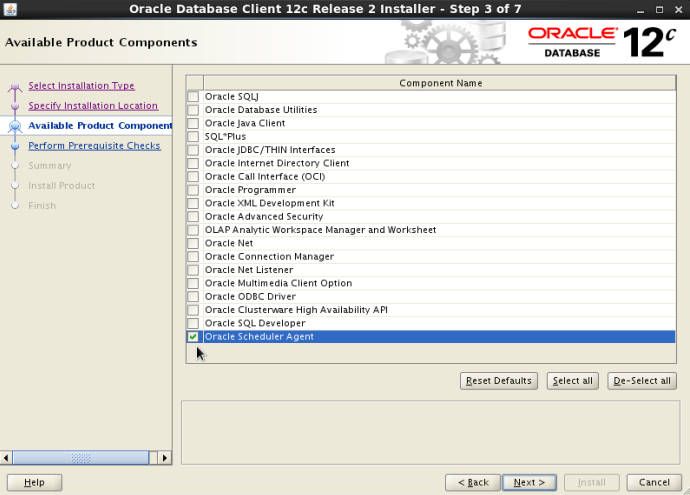

Installing 12c RAC on VirtualBox

Overall, installing 12c RAC is basically the same as installing 11g RAC.

Let's start with a simple 12c RAC (no DNS, no Flex Cluster, no admin policy) on Oracle Linux Release 6 Update 4 for x86_64 (64 Bit), running on VirtualBox 4.2.14.

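I. Setting up VirtualBox (vbox):

2. I set the memory size to 3000 MB; 4000 MB or more is recommended.

3. Create a virtual hard disk now.

4. Choose VDI.

5. Choose "Dynamically allocated".

6. Set the disk size to 30 GB.

7. Set adapter 1 to host-only and adapter 2 to internal network.

8. Under Storage, on the IDE controller, select the Linux ISO image.

9. Click Start to begin the OS installation. Note: swap should preferably be larger than 4 GB. I used only 3 GB, so the prerequisite checks later report a warning, but it does no harm.

10. Select the following package groups during installation: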

Base System > Base

Base System > Compatibility libraries

Base System > Hardware monitoring utilities

Base System > Large Systems Performance

Base System > Network file system client

Base System > Performance Tools

Base System > Perl Support

Servers > Server Platform

Servers > System administration tools

Desktops > Desktop

Desktops > Desktop Platform

Desktops > Fonts

Desktops > General Purpose Desktop

Desktops > Graphical Administration Tools

Desktops > Input Methods

Desktops > X Window System

Applications > Internet Browser

Development > Additional Development

Development > Development Tools

11. Add the following to /etc/sysctl.conf:

```
fs.file-max = 6815744
kernel.sem = 250 32000 100 128
kernel.shmmni = 4096
kernel.shmall = 1073741824
kernel.shmmax = 4398046511104
net.core.rmem_default = 262144
net.core.rmem_max = 4194304
net.core.wmem_default = 262144
net.core.wmem_max = 1048576
fs.aio-max-nr = 1048576
net.ipv4.ip_local_port_range = 9000 65500
```
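The two shared-memory values above match each other. kernel.shmmax is given in bytes and kernel.shmall in pages, and with the usual 4 KiB page size, 4398046511104 / 4096 = 1073741824. Below is a minimal sketch of that conversion. It assumes you check the real page size with `getconf PAGE_SIZE`, and `shmall_pages` is a hypothetical helper:

```shell
#!/bin/bash
# shmall_pages SHMMAX_BYTES [PAGE_SIZE]
# Convert a shared-memory size in bytes into the page count used by kernel.shmall.
# PAGE_SIZE defaults to 4096 (an assumption); on a real host use: getconf PAGE_SIZE
shmall_pages() {
  local shmmax=$1 page_size=${2:-4096}
  echo $(( shmmax / page_size ))
}

shmall_pages 4398046511104 "$(getconf PAGE_SIZE)"   # 1073741824 on a 4 KiB-page system
```

After editing the file, running `/sbin/sysctl -p` as root loads the new values without a reboot.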

12. Add the following to /etc/security/limits.conf:

```
oracle soft nofile 1024
oracle hard nofile 65536
oracle soft nproc 2047
oracle hard nproc 16384
oracle soft stack 10240
oracle hard stack 32768
```
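To confirm the limits took effect, log in again as oracle and compare the `ulimit` output with the values above. This is a minimal sketch. `meets_limit` and `check_oracle_limits` are hypothetical helpers, and `unlimited` is treated as satisfying any requirement:

```shell
#!/bin/bash
# meets_limit ACTUAL REQUIRED -> exit status 0 if ACTUAL >= REQUIRED
meets_limit() {
  [ "$1" = "unlimited" ] && return 0
  [ "$1" -ge "$2" ]
}

# check_oracle_limits: run in a fresh login shell of the oracle user
# (ulimit -n = open files, -u = processes, -s = stack in KB, same units as limits.conf)
check_oracle_limits() {
  local rc=0
  meets_limit "$(ulimit -Sn)" 1024  || { echo "soft nofile too low"; rc=1; }
  meets_limit "$(ulimit -Hn)" 65536 || { echo "hard nofile too low"; rc=1; }
  meets_limit "$(ulimit -Su)" 2047  || { echo "soft nproc too low"; rc=1; }
  meets_limit "$(ulimit -Hu)" 16384 || { echo "hard nproc too low"; rc=1; }
  meets_limit "$(ulimit -Ss)" 10240 || { echo "soft stack too low"; rc=1; }
  meets_limit "$(ulimit -Hs)" 32768 || { echo "hard stack too low"; rc=1; }
  return $rc
}
```

Load the functions in a shell opened with `su - oracle` and run `check_oracle_limits`. It prints nothing when all limits are met.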

13. Install the RPM packages (run the commands in the directory that holds the .rpm files, such as the Packages directory of the installation media):

```
rpm -Uvh *binutils*
rpm -Uvh *compat-libcap1*
rpm -Uvh *compat-libstdc++-33*
rpm -Uvh *gcc*
rpm -Uvh *gcc-c++*
rpm -Uvh *glibc*
rpm -Uvh *glibc-devel*
rpm -Uvh *ksh*
rpm -Uvh *libgcc*
rpm -Uvh *libstdc++*
rpm -Uvh *libstdc++-devel*
rpm -Uvh *libaio*
rpm -Uvh *libaio-devel*
rpm -Uvh *libXext*
rpm -Uvh *libXtst*
rpm -Uvh *libX11*
rpm -Uvh *libXau*
rpm -Uvh *libxcb*
rpm -Uvh *libXi*
rpm -Uvh *make*
rpm -Uvh *sysstat*
rpm -Uvh *unixODBC*
rpm -Uvh *unixODBC-devel*
```
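The `*name*` globs above depend on which files are in the current directory. It is therefore worth checking afterwards that every package really is installed. This is a minimal sketch using `rpm -q --quiet`. The package list mirrors the commands above, and the function names are hypothetical:

```shell
#!/bin/bash
# Package names taken from the rpm -Uvh commands above.
REQUIRED_PKGS="binutils compat-libcap1 compat-libstdc++-33 gcc gcc-c++ glibc
glibc-devel ksh libgcc libstdc++ libstdc++-devel libaio libaio-devel libXext
libXtst libX11 libXau libxcb libXi make sysstat unixODBC unixODBC-devel"

# pkg_installed NAME -> exit status 0 if the RPM database contains NAME
pkg_installed() {
  rpm -q --quiet "$1"
}

# missing_pkgs: print every required package that is not installed
missing_pkgs() {
  local p
  for p in $REQUIRED_PKGS; do
    pkg_installed "$p" || echo "$p"
  done
}
```

Running `missing_pkgs` prints nothing once everything is in place. Otherwise it lists the packages still to install.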

14. Create the groups and the oracle user:

```
groupadd -g 54321 oinstall
groupadd -g 54322 dba
groupadd -g 54323 oper
groupadd -g 54324 backupdba
groupadd -g 54325 dgdba
groupadd -g 54326 kmdba
groupadd -g 54327 asmdba
groupadd -g 54328 asmoper
groupadd -g 54329 asmadmin
useradd -u 54321 -g oinstall -G dba,oper oracle
```

15. Set the oracle user's password:

```
passwd oracle
```

16. Configure /etc/hosts:

```
127.0.0.1 localhost.localdomain localhost
# Public
192.168.56.101 ol6-121-rac1.localdomain ol6-121-rac1
192.168.56.102 ol6-121-rac2.localdomain ol6-121-rac2
# Private
192.168.1.101 ol6-121-rac1-priv.localdomain ol6-121-rac1-priv
192.168.1.102 ol6-121-rac2-priv.localdomain ol6-121-rac2-priv
# Virtual
192.168.56.103 ol6-121-rac1-vip.localdomain ol6-121-rac1-vip
192.168.56.104 ol6-121-rac2-vip.localdomain ol6-121-rac2-vip
# SCAN
192.168.56.105 ol6-121-scan.localdomain ol6-121-scan
192.168.56.106 ol6-121-scan.localdomain ol6-121-scan
192.168.56.107 ol6-121-scan.localdomain ol6-121-scan
```
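Both nodes need the same entries. This quick sketch reports names that have no entry in a hosts-format file, ignoring commented-out lines. `missing_hosts` is a hypothetical helper:

```shell
#!/bin/bash
# missing_hosts FILE NAME...: print each NAME that has no entry in the hosts-format FILE
missing_hosts() {
  local file=$1 name
  shift
  for name in "$@"; do
    # skip comment lines; a name may appear in any column after the IP address
    awk -v n="$name" '$1 !~ /^#/ { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
                      END { exit !found }' "$file" || echo "$name"
  done
}

# Usage (on each node):
#   missing_hosts /etc/hosts ol6-121-rac1 ol6-121-rac2 ol6-121-rac1-priv \
#     ol6-121-rac2-priv ol6-121-rac1-vip ol6-121-rac2-vip ol6-121-scan
```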

17. Edit /etc/security/limits.d/90-nproc.conf and change the line

```
* soft nproc 1024
```

to:

```
* - nproc 16384
```
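The same edit can be made non-interactively with GNU sed. This sketch assumes the line has the default form `*          soft    nproc     1024`, with any amount of whitespace. `raise_nproc` is a hypothetical helper:

```shell
#!/bin/bash
# raise_nproc FILE: turn '* soft nproc 1024' into '* - nproc 16384' in place,
# leaving all other lines (e.g. the root entry) untouched
raise_nproc() {
  sed -i -E 's/^\*[[:space:]]+soft[[:space:]]+nproc[[:space:]]+1024[[:space:]]*$/* - nproc 16384/' "$1"
}

# Usage (as root): raise_nproc /etc/security/limits.d/90-nproc.conf
```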

18. Change the SELinux mode by setting the following in /etc/selinux/config:

```
SELINUX=permissive
```
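Editing /etc/selinux/config only takes effect after a reboot. `setenforce 0` switches the running system to permissive mode immediately. Here is a sketch of both steps. `set_selinux_mode` is a hypothetical helper:

```shell
#!/bin/bash
# set_selinux_mode FILE MODE: rewrite the SELINUX= line (not SELINUXTYPE=) of FILE
set_selinux_mode() {
  sed -i -E "s/^SELINUX=.*/SELINUX=$2/" "$1"
}

# Usage (as root):
#   set_selinux_mode /etc/selinux/config permissive
#   setenforce 0      # permissive now, without waiting for a reboot
#   getenforce        # should print Permissive
```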

19. Disable the firewall:

```
# service iptables stop
# chkconfig iptables off
```

II. Setting up the oracle user's environment:

1. Create the directories:

```
mkdir -p /u01/app/12.1.0.1/grid
mkdir -p /u01/app/oracle/product/12.1.0.1/db_1
chown -R oracle:oinstall /u01
chmod -R 775 /u01/
```

2. Set the oracle user's environment variables (for example in /home/oracle/.bash_profile):

```
# Oracle Settings
export TMP=/tmp
export TMPDIR=$TMP
export ORACLE_HOSTNAME=ol6-121-rac1.localdomain
export ORACLE_UNQNAME=CDBRAC
export ORACLE_BASE=/u01/app/oracle
export GRID_HOME=/u01/app/12.1.0.1/grid
export DB_HOME=$ORACLE_BASE/product/12.1.0.1/db_1
export ORACLE_HOME=$DB_HOME
export ORACLE_SID=cdbrac1
export ORACLE_TERM=xterm
export BASE_PATH=/usr/sbin:$PATH
export PATH=$ORACLE_HOME/bin:$BASE_PATH
export LD_LIBRARY_PATH=$ORACLE_HOME/lib:/lib:/usr/lib
export CLASSPATH=$ORACLE_HOME/JRE:$ORACLE_HOME/jlib:$ORACLE_HOME/rdbms/jlib
alias grid_env='. /home/oracle/grid_env'
alias db_env='. /home/oracle/db_env'
```

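3. Because we do not create a separate grid user, we define two environment files under the oracle user, grid_env and db_env. Sourcing one of them loads the corresponding environment, with no need to switch users.

Create /home/oracle/grid_env as follows:

```
export ORACLE_SID=+ASM1
export ORACLE_HOME=$GRID_HOME
export PATH=$ORACLE_HOME/bin:$BASE_PATH
export LD_LIBRARY_PATH=$ORACLE_HOME/lib:/lib:/usr/lib
export CLASSPATH=$ORACLE_HOME/JRE:$ORACLE_HOME/jlib:$ORACLE_HOME/rdbms/jlib
```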

4. Create /home/oracle/db_env as follows:

```
export ORACLE_SID=cdbrac1
export ORACLE_HOME=$DB_HOME
export PATH=$ORACLE_HOME/bin:$BASE_PATH
export LD_LIBRARY_PATH=$ORACLE_HOME/lib:/lib:/usr/lib
export CLASSPATH=$ORACLE_HOME/JRE:$ORACLE_HOME/jlib:$ORACLE_HOME/rdbms/jlib
```
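Both files can be created in one go with here-documents. Quoting the delimiter (`<<'EOF'`) keeps `$GRID_HOME`, `$DB_HOME` and `$BASE_PATH` literal, so they are expanded when the file is sourced rather than when it is written. This is a sketch. The `write_env_files` helper and its directory argument are hypothetical:

```shell
#!/bin/bash
# write_env_files DIR: create DIR/grid_env and DIR/db_env with the contents above
write_env_files() {
  local dir=$1
  cat > "$dir/grid_env" <<'EOF'
export ORACLE_SID=+ASM1
export ORACLE_HOME=$GRID_HOME
export PATH=$ORACLE_HOME/bin:$BASE_PATH
export LD_LIBRARY_PATH=$ORACLE_HOME/lib:/lib:/usr/lib
export CLASSPATH=$ORACLE_HOME/JRE:$ORACLE_HOME/jlib:$ORACLE_HOME/rdbms/jlib
EOF
  cat > "$dir/db_env" <<'EOF'
export ORACLE_SID=cdbrac1
export ORACLE_HOME=$DB_HOME
export PATH=$ORACLE_HOME/bin:$BASE_PATH
export LD_LIBRARY_PATH=$ORACLE_HOME/lib:/lib:/usr/lib
export CLASSPATH=$ORACLE_HOME/JRE:$ORACLE_HOME/jlib:$ORACLE_HOME/rdbms/jlib
EOF
}

# Usage (as oracle): write_env_files /home/oracle
```

On the second node the SIDs in these files would be +ASM2 and cdbrac2 instead.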

5. Test it:

```
[root@ol6-121-rac1 ~]# su - oracle
[oracle@ol6-121-rac1 ~]$ grid_env
[oracle@ol6-121-rac1 ~]$ echo $ORACLE_HOME
/u01/app/12.1.0.1/grid
[oracle@ol6-121-rac1 ~]$
[oracle@ol6-121-rac1 ~]$ db_env
[oracle@ol6-121-rac1 ~]$ echo $ORACLE_HOME
/u01/app/oracle/product/12.1.0.1/db_1
```

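III. Installing the VirtualBox Guest Additions

2. Run:

```
cd /media/VBOXADDITIONS_4.2.14_86644
sh ./VBoxLinuxAdditions.run
```

3. Once the Guest Additions are installed, you can use VirtualBox shared folders. For the oracle user to access them, add it to the vboxsf group:

```
# usermod -G vboxsf,dba oracle
# id oracle
uid=54321(oracle) gid=54321(oinstall) groups=54321(oinstall),54322(dba),54323(vboxsf)
```

Note that `usermod -G` replaces the user's entire supplementary group list, which is why oper no longer appears above. `usermod -a -G vboxsf oracle` appends vboxsf and keeps the existing groups.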

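4. Now you can unzip the downloaded installation media in your directory:

```
unzip linuxamd64_12c_grid_1of2.zip
unzip linuxamd64_12c_grid_2of2.zip
unzip linuxamd64_12c_database_1of2.zip
unzip linuxamd64_12c_database_2of2.zip
```

Note: if you extract the files on Windows, merge them into two directories, database and grid.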

5. In the VirtualBox Manager, select the ol6-121-rac1 VM and click Settings. Open Shared Folders, click the plus button, and tick "Auto-mount" and "Make Permanent".

6. Install the cvuqdisk package from the grid directory in the shared folder:

```
cd /media/sf_12cR1/grid/rpm
rpm -Uvh cvuqdisk-1.0.9-1.rpm
```

IV. Creating the shared ASM disks

2. Create four shared ASM disks of 5 GB each:

```
E:\>cd E:\Oralce_Virtual_Box\ol6-121-rac
E:\Oralce_Virtual_Box\ol6-121-rac>VBoxManage createhd --filename asm1.vdi --size 5120 --format VDI --variant Fixed
E:\Oralce_Virtual_Box\ol6-121-rac>VBoxManage createhd --filename asm2.vdi --size 5120 --format VDI --variant Fixed
E:\Oralce_Virtual_Box\ol6-121-rac>VBoxManage createhd --filename asm3.vdi --size 5120 --format VDI --variant Fixed
E:\Oralce_Virtual_Box\ol6-121-rac>VBoxManage createhd --filename asm4.vdi --size 5120 --format VDI --variant Fixed
```

3. Attach the new disks to the VM ol6-121-rac1:

```
E:\Oralce_Virtual_Box\ol6-121-rac>VBoxManage storageattach ol6-121-rac1 --storagectl "SATA" --port 1 --device 0 --type hdd --medium asm1.vdi --mtype shareable
E:\Oralce_Virtual_Box\ol6-121-rac>VBoxManage storageattach ol6-121-rac1 --storagectl "SATA" --port 2 --device 0 --type hdd --medium asm2.vdi --mtype shareable
E:\Oralce_Virtual_Box\ol6-121-rac>VBoxManage storageattach ol6-121-rac1 --storagectl "SATA" --port 3 --device 0 --type hdd --medium asm3.vdi --mtype shareable
E:\Oralce_Virtual_Box\ol6-121-rac>VBoxManage storageattach ol6-121-rac1 --storagectl "SATA" --port 4 --device 0 --type hdd --medium asm4.vdi --mtype shareable
```

4. Mark the disks as shareable:

```
E:\Oralce_Virtual_Box\ol6-121-rac>VBoxManage modifyhd asm1.vdi --type shareable
E:\Oralce_Virtual_Box\ol6-121-rac>VBoxManage modifyhd asm2.vdi --type shareable
E:\Oralce_Virtual_Box\ol6-121-rac>VBoxManage modifyhd asm3.vdi --type shareable
E:\Oralce_Virtual_Box\ol6-121-rac>VBoxManage modifyhd asm4.vdi --type shareable
```

5. Start the VM.
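On a Linux or macOS host, the create, attach and modify commands of steps 2 to 4 can be written as loops. This is a dry-run sketch. With the hypothetical `RUN` variable left at its default `echo`, the commands are only printed; set `RUN=` (empty) to execute them. The port numbers and the controller name "SATA" follow the commands above, and the function names are hypothetical:

```shell
#!/bin/bash
# Dry run by default: RUN=echo only prints; RUN= (empty) really executes.
RUN=${RUN-echo}

# create_asm_disks: four fixed-size 5 GB VDI files, asm1.vdi .. asm4.vdi
create_asm_disks() {
  local i
  for i in 1 2 3 4; do
    $RUN VBoxManage createhd --filename "asm$i.vdi" --size 5120 --format VDI --variant Fixed
  done
}

# attach_asm_disks VM: attach asmN.vdi to SATA port N of VM as a shareable disk
attach_asm_disks() {
  local vm=$1 i
  for i in 1 2 3 4; do
    $RUN VBoxManage storageattach "$vm" --storagectl "SATA" --port "$i" --device 0 \
      --type hdd --medium "asm$i.vdi" --mtype shareable
  done
}

# share_asm_disks: mark the four images as shareable
share_asm_disks() {
  local i
  for i in 1 2 3 4; do
    $RUN VBoxManage modifyhd "asm$i.vdi" --type shareable
  done
}
```

Run `create_asm_disks; attach_asm_disks ol6-121-rac1; share_asm_disks` in the directory that should hold the images. The same `attach_asm_disks ol6-121-rac2` call covers the second node after cloning.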

V. Binding the new shared disks on the host with udev:

```
# cd /dev
# ls sd*
sda sda1 sda2 sdb sdc sdd sde
```

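2. Partition the disks with fdisk:

```
fdisk /dev/sdb
```

Enter n, p, 1, then press Enter twice to accept the default first and last sectors, then w.

Repeat for /dev/sdc, /dev/sdd and /dev/sde.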
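The interactive answers can also be piped into fdisk for all four disks. This sketch assumes each disk is empty. The helper names are hypothetical, and `RUN` defaults to `echo`, so nothing is written until you set `RUN=`:

```shell
#!/bin/bash
RUN=${RUN-echo}

# fdisk_answers: new (n), primary (p), partition 1,
# default first and last sector (two empty lines), write (w)
fdisk_answers() {
  printf 'n\np\n1\n\n\nw\n'
}

# partition_disks DEV...: create one primary partition spanning each disk
partition_disks() {
  local d
  for d in "$@"; do
    fdisk_answers | $RUN fdisk "$d"
  done
}

# Usage (as root, with RUN= to really write):
#   partition_disks /dev/sdb /dev/sdc /dev/sdd /dev/sde
```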

3. After partitioning, check again:

```
# cd /dev
# ls sd*
sda sda1 sda2 sdb sdb1 sdc sdc1 sdd sdd1 sde sde1
```

4. Add the following to /etc/scsi_id.config:

```
options=-g
```

5. Find the SCSI IDs:

```
[root@ol6-121-rac1 dev]# /sbin/scsi_id -g -u -d /dev/sdb
[root@ol6-121-rac1 dev]# /sbin/scsi_id -g -u -d /dev/sdc
[root@ol6-121-rac1 dev]# /sbin/scsi_id -g -u -d /dev/sdd
[root@ol6-121-rac1 dev]# /sbin/scsi_id -g -u -d /dev/sde
```

My output was:

```
1ATA_VBOX_HARDDISK_VBd468bcab-b01d8894
1ATA_VBOX_HARDDISK_VBc1b0c3f0-162d709a
1ATA_VBOX_HARDDISK_VB527c91e6-934cf458
1ATA_VBOX_HARDDISK_VB59bb6d05-167b1e5f
```

6. Bind the disks by adding the SCSI IDs found above to /etc/udev/rules.d/99-oracle-asmdevices.rules:

```
KERNEL=="sd?1", BUS=="scsi", PROGRAM=="/sbin/scsi_id -g -u -d /dev/$parent", RESULT=="1ATA_VBOX_HARDDISK_VBd468bcab-b01d8894", NAME="asm-disk1", OWNER="oracle", GROUP="dba", MODE="0660"
KERNEL=="sd?1", BUS=="scsi", PROGRAM=="/sbin/scsi_id -g -u -d /dev/$parent", RESULT=="1ATA_VBOX_HARDDISK_VBc1b0c3f0-162d709a", NAME="asm-disk2", OWNER="oracle", GROUP="dba", MODE="0660"
KERNEL=="sd?1", BUS=="scsi", PROGRAM=="/sbin/scsi_id -g -u -d /dev/$parent", RESULT=="1ATA_VBOX_HARDDISK_VB527c91e6-934cf458", NAME="asm-disk3", OWNER="oracle", GROUP="dba", MODE="0660"
KERNEL=="sd?1", BUS=="scsi", PROGRAM=="/sbin/scsi_id -g -u -d /dev/$parent", RESULT=="1ATA_VBOX_HARDDISK_VB59bb6d05-167b1e5f", NAME="asm-disk4", OWNER="oracle", GROUP="dba", MODE="0660"
```
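Instead of pasting the IDs by hand, you can generate the rules file from `scsi_id` output. This sketch reproduces the rule format above. `udev_rule`, `asm_rules` and the overridable `SCSI_ID` variable are hypothetical helpers. Disks are numbered asm-disk1, asm-disk2 and so on in the order you pass them:

```shell
#!/bin/bash
# Command used to read a disk's SCSI ID; overridable, e.g. for testing.
SCSI_ID=${SCSI_ID:-/sbin/scsi_id}

# udev_rule N ID: print one rule in the same format as above
# ($parent is kept literal: udev substitutes it, not the shell)
udev_rule() {
  printf 'KERNEL=="sd?1", BUS=="scsi", PROGRAM=="/sbin/scsi_id -g -u -d /dev/$parent", RESULT=="%s", NAME="asm-disk%s", OWNER="oracle", GROUP="dba", MODE="0660"\n' "$2" "$1"
}

# asm_rules DISK...: one rule per disk (sdb, sdc, ...) numbered in argument order
asm_rules() {
  local n=1 d id
  for d in "$@"; do
    id=$($SCSI_ID -g -u -d "/dev/$d") || return 1
    udev_rule "$n" "$id"
    n=$((n + 1))
  done
}

# Usage (as root):
#   asm_rules sdb sdc sdd sde > /etc/udev/rules.d/99-oracle-asmdevices.rules
```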

7. Refresh the partition tables:

```
# /sbin/partprobe /dev/sdb1
# /sbin/partprobe /dev/sdc1
# /sbin/partprobe /dev/sdd1
# /sbin/partprobe /dev/sde1
```

8. Reload the udev rules and restart udev:

```
# /sbin/udevadm control --reload-rules
# /sbin/start_udev
```

9. Check that the ASM disks exist:

```
# ls -al /dev/asm*
brw-rw---- 1 oracle dba 8, 17 Oct 12 14:39 /dev/asm-disk1
brw-rw---- 1 oracle dba 8, 33 Oct 12 14:38 /dev/asm-disk2
brw-rw---- 1 oracle dba 8, 49 Oct 12 14:39 /dev/asm-disk3
brw-rw---- 1 oracle dba 8, 65 Oct 12 14:39 /dev/asm-disk4
```
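After each reboot, and on both nodes, the devices should look like the listing above. This small check assumes GNU `stat`. `check_dev` is a hypothetical helper:

```shell
#!/bin/bash
# check_dev PATH OWNER GROUP MODE: report if PATH is missing or has the wrong
# owner, group or octal mode; exit status 0 when everything matches
check_dev() {
  local actual
  [ -e "$1" ] || { echo "$1: missing"; return 1; }
  actual=$(stat -c '%U %G %a' "$1")
  [ "$actual" = "$2 $3 $4" ] || { echo "$1: $actual (expected $2 $3 $4)"; return 1; }
}

# Usage:
#   for d in /dev/asm-disk1 /dev/asm-disk2 /dev/asm-disk3 /dev/asm-disk4; do
#     check_dev "$d" oracle dba 660
#   done
```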

VI. Cloning ol6-121-rac1 as ol6-121-rac2:

```
E:\Oralce_Virtual_Box>cd ol6-121-rac1
E:\Oralce_Virtual_Box\ol6-121-rac1>VBoxManage clonehd ol6-121-rac1.vdi ol6-121-rac2.vdi
```

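2. In the VirtualBox Manager, click New and choose the same type, version and memory size as ol6-121-rac1. Then choose "Use an existing virtual hard drive file" and select ol6-121-rac2.vdi.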

3. Attach the shared ASM disks to it as well:

```
VBoxManage storageattach ol6-121-rac2 --storagectl "SATA" --port 1 --device 0 --type hdd --medium asm1.vdi --mtype shareable
VBoxManage storageattach ol6-121-rac2 --storagectl "SATA" --port 2 --device 0 --type hdd --medium asm2.vdi --mtype shareable
VBoxManage storageattach ol6-121-rac2 --storagectl "SATA" --port 3 --device 0 --type hdd --medium asm3.vdi --mtype shareable
VBoxManage storageattach ol6-121-rac2 --storagectl "SATA" --port 4 --device 0 --type hdd --medium asm4.vdi --mtype shareable
```

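4. Start the new VM and fix its network:

Clean out /etc/udev/rules.d. Strictly, only 70-persistent-net.rules needs to go, because it still records the MAC addresses of ol6-121-rac1's adapters. Keep 99-oracle-asmdevices.rules, which the ASM disks depend on.

In System > Preferences > Network Connections, delete all the network adapters listed.

Reboot the host.

Run ifconfig to see the MAC addresses of eth0 and eth1. Then go back into System > Preferences > Network Connections and add a wired connection for each adapter. Enter the MAC address you just saw, and assign IPv4 addresses analogous to those of ol6-121-rac1. Finally, restart the network:

```
# service network restart
```

After the network restarts you can connect to the host. Keep the two hosts consistent: for example, eth0 is the public NIC and eth1 the private NIC on both.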
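Instead of the GUI, you can also configure the interfaces in /etc/sysconfig/network-scripts/ifcfg-eth0 and ifcfg-eth1. This sketch prints such a file. The helper names are hypothetical. The addresses in the usage comment are the ol6-121-rac2 entries from /etc/hosts, with an assumed 255.255.255.0 netmask:

```shell
#!/bin/bash
# ifcfg DEVICE MAC IPADDR NETMASK: print a static ifcfg file for OL6
ifcfg() {
  cat <<EOF
DEVICE=$1
HWADDR=$2
TYPE=Ethernet
ONBOOT=yes
BOOTPROTO=none
IPADDR=$3
NETMASK=$4
EOF
}

# mac_of DEVICE: the MAC address the kernel reports for DEVICE
mac_of() {
  cat "/sys/class/net/$1/address"
}

# Usage (as root on ol6-121-rac2):
#   ifcfg eth0 "$(mac_of eth0)" 192.168.56.102 255.255.255.0 > /etc/sysconfig/network-scripts/ifcfg-eth0
#   ifcfg eth1 "$(mac_of eth1)" 192.168.1.102 255.255.255.0 > /etc/sysconfig/network-scripts/ifcfg-eth1
#   service network restart
```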

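5. Check whether the installation prerequisites are met:

```
[oracle@ol6-121-rac1 grid]$ ./runcluvfy.sh stage -pre crsinst -n ol6-121-rac1,ol6-121-rac2 -verbose
```

Three failures can be ignored: DNS, /etc/resolv.conf and swap.

At this point the 12c RAC database is installed. Enjoy.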

```
Copyright (c) 1982, 2013, Oracle. All rights reserved.

Connected to:
Oracle Database 12c Enterprise Edition Release 12.1.0.1.0 - 64bit Production
With the Partitioning, Real Application Clusters, Automatic Storage Management, OLAP,
Advanced Analytics and Real Application Testing options

SQL> select instance_name,status from gv$instance order by 1;

INSTANCE_NAME    STATUS
---------------- ------------
cdbrac1          OPEN
cdbrac2          OPEN

SQL>
```

P.S. If starting the database fails with ORA-00845: MEMORY_TARGET not supported on this system, /dev/shm is most likely too small. In /etc/fstab, change

```
tmpfs /dev/shm tmpfs defaults 0 0
```

to:

```
tmpfs /dev/shm tmpfs defaults,size=3g 0 0
```

Then reboot the host and restart the database.
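ORA-00845 appears when /dev/shm is smaller than MEMORY_TARGET. The fstab change can be scripted, and remounting tmpfs with a new `size=` applies it without a reboot. This is a sketch. `set_shm_size` is a hypothetical helper, and 3g is the size used above:

```shell
#!/bin/bash
# set_shm_size FSTAB SIZE: replace the options of the tmpfs /dev/shm line
# ("defaults" or "defaults,...") with "defaults,size=SIZE"
set_shm_size() {
  sed -i -E "s|^(tmpfs[[:space:]]+/dev/shm[[:space:]]+tmpfs[[:space:]]+)defaults[^[:space:]]*|\1defaults,size=$2|" "$1"
}

# Usage (as root):
#   set_shm_size /etc/fstab 3g
#   mount -o remount,size=3g /dev/shm   # resize the running tmpfs immediately
#   df -h /dev/shm
```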

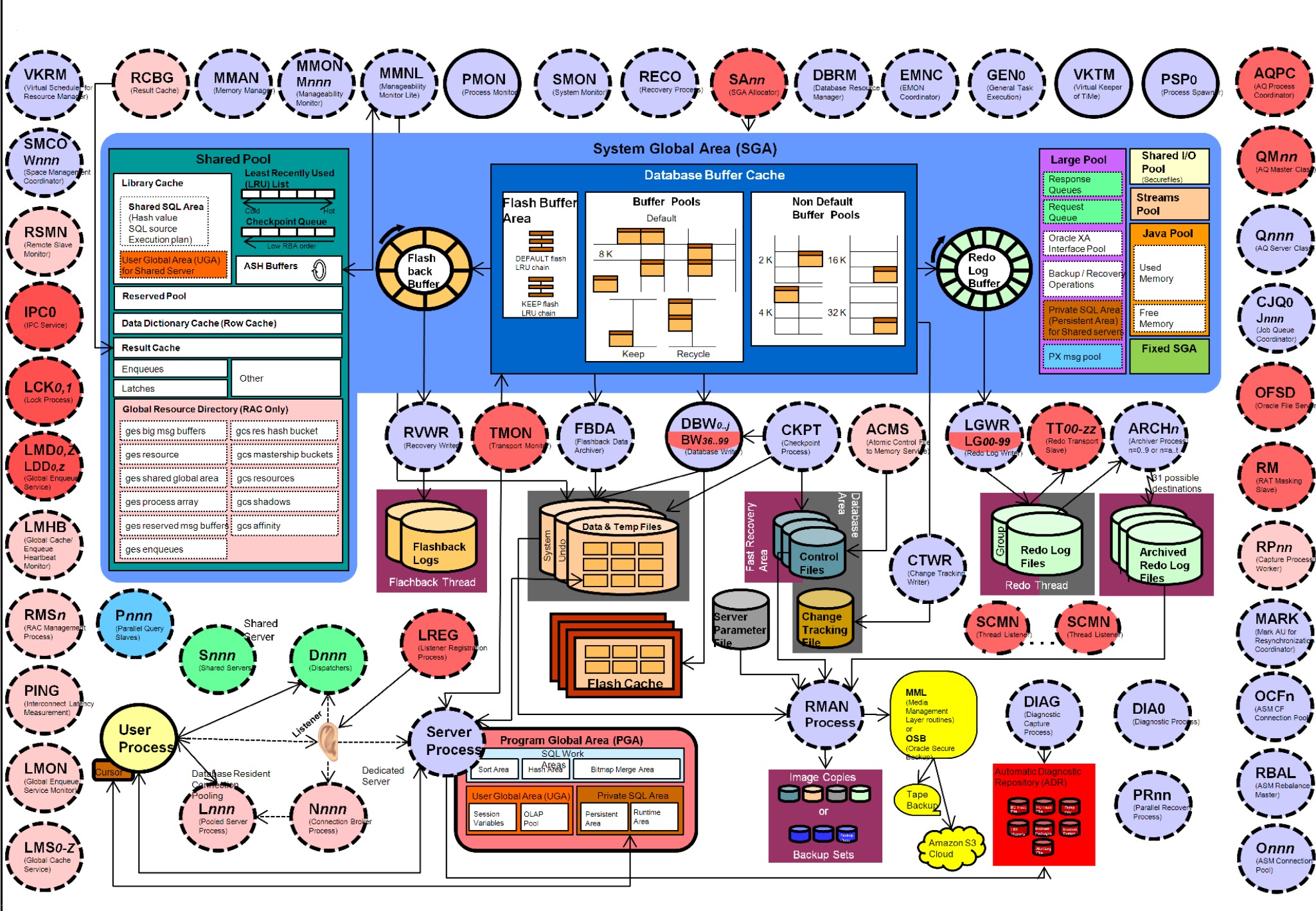

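12c architecture diagrams

I came across the 12c architecture diagram on Weibo and am posting it here too, partly as a note for myself and partly for fellow Oracle enthusiasts.

While I was at it, I also collected the database architecture diagrams from 9i to 12c, all as PDFs: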

(1)Db9i_Server_Arch

(2)Db10g_Server_Arch

(3)Db11g_Server_Arch

(4)Db12c_Server_Arch

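A brief look at the 12c In-Memory Option

(1) The In-Memory Option (IMO below) will ship with 12.1.0.2.

(2) The In-Memory Option will not replace TimesTen (TT below), because the two products work at different layers. TT still sits in front of the database layer, tightly coupled to the application, and acts as the application's cache. IMO lives in the database layer, so it can take part in high-availability architectures such as RAC and Data Guard, which TT cannot. For the same reason, IMO will not replace Exalytics either.

(3) IMO adopts, or rather borrows from, columnar databases. For each in-memory extent it stores the minimum and maximum value of every column, much like Exadata Storage Indexes on every column. Oracle calls this the In-Memory Column Store Storage Index.

(4) Oracle In-Memory Columnar Compression provides 2x to 10x compression.

(5) Execution plans get a new operation, TABLE ACCESS INMEMORY FULL, analogous to TABLE ACCESS STORAGE FULL on Exadata.

(6) Joins use Bloom filters together with hash joins.

(7) A few initialization parameters enable IMO; in 12.1.0.2 you can list them with show parameter memory. For example, inmemory_query turns IMO on or off at the system or session level. New views prefixed with V$IM_ show the objects held in memory, such as V$IM_SEGMENTS and V$IM_USER_SEGMENTS.

(8) For OLTP, DML works exactly as before. When DML modifies data, the affected entries in the in-memory column store are marked stale and the changed rows are copied into a Transaction Journal. Note: the in-memory column store is always kept up to date. For read consistency, the column data is merged with the Transaction Journal, and this merge is an online operation.

(9) IMO may affect how quickly the database can serve requests again after a crash and restart. The row-store data is available right away, but loading data into the in-memory column store still takes some time.

(10) IMO's biggest strength is that it is completely transparent to applications: without changing anything, you can get large performance gains.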

12c network configuration

Starting with 12c, connecting to a PDB generally requires a TNS name. Here are the three main network configuration files.

listener.ora

```
# Generated by Oracle configuration tools.

SID_LIST_LISTENER =
  (SID_LIST =
    (SID_DESC =
      (GLOBAL_DBNAME = ora12c)
      (ORACLE_HOME = /u01/ora12c/app/oracle/product/12.1.0/db_1)
      (SID_NAME = ora12c)
    )
    (SID_DESC =
      (GLOBAL_DBNAME = pdb1)
      (ORACLE_HOME = /u01/ora12c/app/oracle/product/12.1.0/db_1)
      (SID_NAME = ora12c)
    )
  )

LISTENER =
  (DESCRIPTION =
    (ADDRESS = (PROTOCOL = TCP)(HOST = 192.168.56.132)(PORT = 1522))
  )

ADR_BASE_LISTENER = /u01/ora12c/app/oracle
```

tnsnames.ora

```
# Generated by Oracle configuration tools.

ORA12C =
  (DESCRIPTION =
    (ADDRESS_LIST =
      (ADDRESS = (PROTOCOL = TCP)(HOST = 192.168.56.132)(PORT = 1522))
    )
    (CONNECT_DATA =
      (SERVICE_NAME = ora12c)
    )
  )

PDB1 =
  (DESCRIPTION =
    (ADDRESS_LIST =
      (ADDRESS = (PROTOCOL = TCP)(HOST = 192.168.56.132)(PORT = 1522))
    )
    (CONNECT_DATA =
      (SERVICE_NAME = pdb1)
    )
  )
```

sqlnet.ora

```
# Generated by Oracle configuration tools.

NAMES.DIRECTORY_PATH= (TNSNAMES, HOSTNAME)

ADR_BASE = /u01/ora12c/app/oracle
```
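Each PDB is reached through its own service name, so adding another PDB means adding another tnsnames.ora entry of the same shape. This sketch generates one. `tns_entry` is a hypothetical helper, and the default file location assumed in the usage comment is $ORACLE_HOME/network/admin:

```shell
#!/bin/bash
# tns_entry ALIAS HOST PORT SERVICE: print a tnsnames.ora entry shaped like PDB1 above
tns_entry() {
  cat <<EOF
$1 =
  (DESCRIPTION =
    (ADDRESS_LIST =
      (ADDRESS = (PROTOCOL = TCP)(HOST = $2)(PORT = $3))
    )
    (CONNECT_DATA =
      (SERVICE_NAME = $4)
    )
  )
EOF
}

# Usage:
#   tns_entry PDB1 192.168.56.132 1522 pdb1 >> $ORACLE_HOME/network/admin/tnsnames.ora
#   tnsping PDB1
```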

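12c Flex Cluster notes (1)

This post sat in my drafts for almost a year; it grew so long that I lost confidence I would ever finish it. Leaving it there seemed a waste, though, so I am splitting it into parts and sharing it.

About Flex Cluster

2. Flex and standard clusters can be converted into each other with crsctl set cluster mode flex|standard; CRS has to be restarted afterwards.

3. Hub and leaf nodes can be converted into each other.

4. GNS with a fixed VIP is required.

Earlier reports said a database could be installed on a leaf node, and some books even stated it, reasoning that Flex ASM can serve database instances running on other hosts. In the release that actually shipped, however, a database cannot be installed on a leaf node. If you try, the installer reports an error:

1. Installing a Flex Cluster.

Installing a Flex Cluster is not difficult once the preparation is done. The only catch is that the 12c Flex Cluster needs GNS, so setting up DHCP and DNS in a virtual-machine environment takes a bit more work.

I set up one VM as the DHCP server and used ISC BIND on the physical host (Windows 7) for DNS resolution.

The DHCP server configuration on that VM: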

```
ddns-update-style interim;
ignore client-updates;

## DHCP for public:
subnet 192.168.56.0 netmask 255.255.255.0
{
  default-lease-time 43200;
  max-lease-time 86400;
  option subnet-mask 255.255.255.0;
  option broadcast-address 192.168.56.255;
  option routers 192.168.56.1;
  option domain-name-servers 192.168.56.3;
  option domain-name "grid.localdomain";
  pool
  {
    range 192.168.56.10 192.168.56.29;
  }
}

## DHCP for private
subnet 192.168.57.0 netmask 255.255.255.0
{
  default-lease-time 43200;
  max-lease-time 86400;
  option subnet-mask 255.255.255.0;
  option broadcast-address 192.168.57.255;
  pool
  {
    range 192.168.57.30 192.168.57.49;
  }
}
```
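The `option subnet-mask` and `option broadcast-address` values that dhcpd hands out should agree with the `subnet ... netmask ...` declaration they sit in. This small pure-bash sketch helps check that. The helper names are hypothetical. The broadcast address is the network address with all host bits set:

```shell
#!/bin/bash
# ip_to_int A.B.C.D -> 32-bit integer
ip_to_int() {
  local IFS=. a b c d
  read -r a b c d <<< "$1"
  echo $(( (a << 24) | (b << 16) | (c << 8) | d ))
}

# int_to_ip N -> dotted quad
int_to_ip() {
  local n=$1
  echo "$(( (n >> 24) & 255 )).$(( (n >> 16) & 255 )).$(( (n >> 8) & 255 )).$(( n & 255 ))"
}

# broadcast_for NETWORK NETMASK -> NETWORK with every host bit set
broadcast_for() {
  local net mask
  net=$(ip_to_int "$1")
  mask=$(ip_to_int "$2")
  int_to_ip "$(( net | (~mask & 0xFFFFFFFF) ))"
}

# Usage: broadcast_for 192.168.57.0 255.255.255.0   # prints 192.168.57.255
```

A mask of 255.255.0.0 on the same network would give 192.168.255.255, which would not match the `broadcast-address` line above.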

The BIND configuration on Windows:

```
options {
    directory "c:\windows\SysWOW64\dns\etc";
    forwarders {8.8.8.8; 8.8.4.4;};
    allow-transfer { none; };
};

logging{
    channel my_log{
        file "named.log" versions 3 size 2m;
        severity info;
        print-time yes;
        print-severity yes;
        print-category yes;
    };
    category default{
        my_log;
    };
};

######################################
# ADD for oracle RAC SCAN,
# START FROM HERE
######################################
zone "56.168.192.in-addr.arpa" IN {
    type master;
    file "C:\Windows\SysWOW64\dns\etc\56.168.192.in-addr.local";
    allow-update { none; };
};

zone "localdomain" IN {
    type master;
    file "C:\Windows\SysWOW64\dns\etc\localdomain.zone";
    allow-update { none; };
};

zone "grid.localdomain" IN {
    type forward;
    forward only;
    forwarders { 192.168.56.108 ;};
};
######################################
# ADD for oracle RAC SCAN,
# END FROM HERE
######################################
```

On the cluster nodes, the hosts file looks like this:

```
::1 localhost localhost.localdomain localhost6 localhost6.localdomain6
127.0.0.1 localhost.localdomain localhost
# Public
192.168.56.121 ol6-121-rac1.localdomain ol6-121-rac1
192.168.56.122 ol6-121-rac2.localdomain ol6-121-rac2
# Private
192.168.57.31 ol6-121-rac1-priv.localdomain ol6-121-rac1-priv
192.168.57.32 ol6-121-rac2-priv.localdomain ol6-121-rac2-priv
# With GNS, the VIPs and SCAN VIPs are provided by GNS
# Virtual
#192.168.56.103 ol6-121-rac1-vip.localdomain ol6-121-rac1-vip
#192.168.56.104 ol6-121-rac2-vip.localdomain ol6-121-rac2-vip
# SCAN
#192.168.56.105 ol6-121-scan.localdomain ol6-121-scan
#192.168.56.106 ol6-121-scan.localdomain ol6-121-scan
#192.168.56.107 ol6-121-scan.localdomain ol6-121-scan
```

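Once the installation is finished, we will use ifconfig to look at the IP addresses that were brought up.

The installation itself is simple; it is basically next, next, next. My environment is a two-node cluster, and during installation I made one node a hub node and the other a leaf node. The roles can be converted into each other later.

The installation, step by step in screenshots:

Note: the GNS subdomain here is grid.localdomain, matching the domain in the DHCP configuration above.

Note: if the ASM disks do not show up here, click Change Discovery Path.

Note: if your GNS environment is not set up properly, for example if DHCP or DNS has a problem, running root.sh at this step is very likely to fail: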

……

CRS-2672: Attempting to start 'ora.DATA.dg' on 'ol6-121-rac1'

CRS-2676: Start of 'ora.DATA.dg' on 'ol6-121-rac1' succeeded

2013/07/12 15:37:01 CLSRSC-349: The Oracle Clusterware stack failed to stop <<<<<<<<

Died at /u01/app/12.1.0.1/grid/crs/install/crsinstall.pm line 310. <<<<<<<<<<

The command '/u01/app/12.1.0.1/grid/perl/bin/perl -I/u01/app/12.1.0.1/grid/perl/lib -I/u01/app/12.1.0.1/grid/crs/install /u01/app/12.1.0.1/grid/crs/install/rootcrs.pl ' execution failed

[root@ol6-121-rac1 ~]#

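The log shows the following. Searching MOS or the web for these errors, however, turned up almost nothing. I don't know whether there is more now, but when I looked a year ago there was essentially no information. Only 11g cluster installations had produced similar errors, and there the cause was a GNS configuration problem. So I put considerable effort into configuring DNS and DHCP and getting GNS right, after which the installation went through. If you install a Flex Cluster, make sure the network and GNS are configured properly.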

……

> CRS-2794: Shutdown of Cluster Ready Services-managed resources on 'ol6-121-rac1' has failed

> CRS-2675: Stop of 'ora.crsd' on 'ol6-121-rac1' failed

> CRS-2799: Failed to shut down resource 'ora.crsd' on 'ol6-121-rac1'

> CRS-2795: Shutdown of Oracle High Availability Services-managed resources on 'ol6-121-rac1' has failed

> CRS-4687: Shutdown command has completed with errors.

> CRS-4000: Command Stop failed, or completed with errors.

>End Command output

2013-07-12 15:37:00: The return value of stop of CRS: 1

2013-07-12 15:37:00: Executing cmd: /u01/app/12.1.0.1/grid/bin/crsctl check crs

2013-07-12 15:37:01: Command output:

> CRS-4638: Oracle High Availability Services is online

> CRS-4537: Cluster Ready Services is online

> CRS-4529: Cluster Synchronization Services is online

> CRS-4533: Event Manager is online

>End Command output

2013-07-12 15:37:01: Executing cmd: /u01/app/12.1.0.1/grid/bin/clsecho -p has -f clsrsc -m 349

2013-07-12 15:37:01: Command output:

> CLSRSC-349: The Oracle Clusterware stack failed to stop

>End Command output

2013-07-12 15:37:01: Executing cmd: /u01/app/12.1.0.1/grid/bin/clsecho -p has -f clsrsc -m 349

2013-07-12 15:37:01: Command output:

> CLSRSC-349: The Oracle Clusterware stack failed to stop

>End Command output

2013-07-12 15:37:01: CLSRSC-349: The Oracle Clusterware stack failed to stop

2013-07-12 15:37:01: ###### Begin DIE Stack Trace ######

2013-07-12 15:37:01: Package File Line Calling

2013-07-12 15:37:01: --------------- -------------------- ---- ----------

2013-07-12 15:37:01: 1: main rootcrs.pl 211 crsutils::dietrap

2013-07-12 15:37:01: 2: crsinstall crsinstall.pm 310 main::__ANON__

2013-07-12 15:37:01: 3: crsinstall crsinstall.pm 219 crsinstall::CRSInstall

2013-07-12 15:37:01: 4: main rootcrs.pl 334 crsinstall::new

2013-07-12 15:37:01: ####### End DIE Stack Trace #######

2013-07-12 15:37:01: ROOTCRS_STACK checkpoint has failed

2013-07-12 15:37:01: Running as user oracle: /u01/app/12.1.0.1/grid/bin/cluutil -ckpt -oraclebase /u01/app/oracle -writeckpt -name ROOTCRS_STACK -state FAIL

2013-07-12 15:37:01: s_run_as_user2: Running /bin/su oracle -c ' /u01/app/12.1.0.1/grid/bin/cluutil -ckpt -oraclebase /u01/app/oracle -writeckpt -name ROOTCRS_STACK -state FAIL '

2013-07-12 15:37:01: Removing file /tmp/fileBn895c

2013-07-12 15:37:01: Successfully removed file: /tmp/fileBn895c

2013-07-12 15:37:01: /bin/su successfully executed

2013-07-12 15:37:01: Succeeded in writing the checkpoint:'ROOTCRS_STACK' with status:FAIL

2013-07-12 15:37:01: Running as user oracle: /u01/app/12.1.0.1/grid/bin/cluutil -ckpt -oraclebase /u01/app/oracle -writeckpt -name ROOTCRS_STACK -state FAIL

2013-07-12 15:37:01: s_run_as_user2: Running /bin/su oracle -c ' /u01/app/12.1.0.1/grid/bin/cluutil -ckpt -oraclebase /u01/app/oracle -writeckpt -name ROOTCRS_STACK -state FAIL '

2013-07-12 15:37:01: Removing file /tmp/fileYx9kDX

2013-07-12 15:37:01: Successfully removed file: /tmp/fileYx9kDX

2013-07-12 15:37:01: /bin/su successfully executed

2013-07-12 15:37:01: Succeeded in writing the checkpoint:'ROOTCRS_STACK' with status:FAIL

If the scripts instead run without problems, as below, congratulations: you go straight on to the next step, configuring ASM.

[root@ol6-121-rac1 12.1.0.1]# /u01/app/oraInventory/orainstRoot.sh

Changing permissions of /u01/app/oraInventory.

Adding read,write permissions for group.

Removing read,write,execute permissions for world.

Changing groupname of /u01/app/oraInventory to oinstall.

The execution of the script is complete.

[root@ol6-121-rac1 12.1.0.1]#

[root@ol6-121-rac1 12.1.0.1]#

[root@ol6-121-rac1 12.1.0.1]# /u01/app/12.1.0.1/grid/root.sh

Performing root user operation for Oracle 12c

The following environment variables are set as:

ORACLE_OWNER= oracle

ORACLE_HOME= /u01/app/12.1.0.1/grid

Enter the full pathname of the local bin directory: [/usr/local/bin]:

Copying dbhome to /usr/local/bin ...

Copying oraenv to /usr/local/bin ...

Copying coraenv to /usr/local/bin ...

Creating /etc/oratab file...

Entries will be added to the /etc/oratab file as needed by

Database Configuration Assistant when a database is created

Finished running generic part of root script.

Now product-specific root actions will be performed.

Using configuration parameter file: /u01/app/12.1.0.1/grid/crs/install/crsconfig_params

2013/08/20 00:23:03 CLSRSC-363: User ignored prerequisites during installation

OLR initialization - successful

root wallet

root wallet cert

root cert export

peer wallet

profile reader wallet

pa wallet

peer wallet keys

pa wallet keys

peer cert request

pa cert request

peer cert

pa cert

peer root cert TP

profile reader root cert TP

pa root cert TP

peer pa cert TP

pa peer cert TP

profile reader pa cert TP

profile reader peer cert TP

peer user cert

pa user cert

2013/08/20 00:24:00 CLSRSC-330: Adding Clusterware entries to file 'oracle-ohasd.conf'

CRS-4133: Oracle High Availability Services has been stopped.

CRS-4123: Oracle High Availability Services has been started.

CRS-4133: Oracle High Availability Services has been stopped.

CRS-4123: Oracle High Availability Services has been started.

CRS-2673: Attempting to stop 'ora.drivers.acfs' on 'ol6-121-rac1'

CRS-2677: Stop of 'ora.drivers.acfs' on 'ol6-121-rac1' succeeded

CRS-2672: Attempting to start 'ora.evmd' on 'ol6-121-rac1'

CRS-2672: Attempting to start 'ora.mdnsd' on 'ol6-121-rac1'

CRS-2676: Start of 'ora.mdnsd' on 'ol6-121-rac1' succeeded

CRS-2676: Start of 'ora.evmd' on 'ol6-121-rac1' succeeded

CRS-2672: Attempting to start 'ora.gpnpd' on 'ol6-121-rac1'

CRS-2676: Start of 'ora.gpnpd' on 'ol6-121-rac1' succeeded

CRS-2672: Attempting to start 'ora.cssdmonitor' on 'ol6-121-rac1'

CRS-2672: Attempting to start 'ora.gipcd' on 'ol6-121-rac1'

CRS-2676: Start of 'ora.cssdmonitor' on 'ol6-121-rac1' succeeded

CRS-2676: Start of 'ora.gipcd' on 'ol6-121-rac1' succeeded

CRS-2672: Attempting to start 'ora.cssd' on 'ol6-121-rac1'

CRS-2672: Attempting to start 'ora.diskmon' on 'ol6-121-rac1'

CRS-2676: Start of 'ora.diskmon' on 'ol6-121-rac1' succeeded

CRS-2676: Start of 'ora.cssd' on 'ol6-121-rac1' succeeded

ASM created and started successfully.

Disk Group DATA created successfully.

CRS-2672: Attempting to start 'ora.storage' on 'ol6-121-rac1'

CRS-2676: Start of 'ora.storage' on 'ol6-121-rac1' succeeded

CRS-2672: Attempting to start 'ora.crsd' on 'ol6-121-rac1'

CRS-2676: Start of 'ora.crsd' on 'ol6-121-rac1' succeeded

CRS-4256: Updating the profile

Successful addition of voting disk 7998f05a43964f77bfe185cd468262c4.

Successfully replaced voting disk group with +DATA.

CRS-4256: Updating the profile

CRS-4266: Voting file(s) successfully replaced

## STATE File Universal Id File Name Disk group

-- ----- ----------------- --------- ---------

1. ONLINE 7998f05a43964f77bfe185cd468262c4 (/dev/asm-disk1) [DATA]

Located 1 voting disk(s).

CRS-2791: Starting shutdown of Oracle High Availability Services-managed resources on 'ol6-121-rac1'

CRS-2673: Attempting to stop 'ora.crsd' on 'ol6-121-rac1'

CRS-2677: Stop of 'ora.crsd' on 'ol6-121-rac1' succeeded

CRS-2673: Attempting to stop 'ora.storage' on 'ol6-121-rac1'

CRS-2673: Attempting to stop 'ora.mdnsd' on 'ol6-121-rac1'

CRS-2673: Attempting to stop 'ora.gpnpd' on 'ol6-121-rac1'

CRS-2673: Attempting to stop 'ora.drivers.acfs' on 'ol6-121-rac1'

CRS-2677: Stop of 'ora.storage' on 'ol6-121-rac1' succeeded

CRS-2677: Stop of 'ora.drivers.acfs' on 'ol6-121-rac1' succeeded

CRS-2673: Attempting to stop 'ora.ctssd' on 'ol6-121-rac1'

CRS-2673: Attempting to stop 'ora.evmd' on 'ol6-121-rac1'

CRS-2673: Attempting to stop 'ora.asm' on 'ol6-121-rac1'

CRS-2677: Stop of 'ora.gpnpd' on 'ol6-121-rac1' succeeded

CRS-2677: Stop of 'ora.mdnsd' on 'ol6-121-rac1' succeeded

CRS-2677: Stop of 'ora.ctssd' on 'ol6-121-rac1' succeeded

CRS-2677: Stop of 'ora.evmd' on 'ol6-121-rac1' succeeded

CRS-2677: Stop of 'ora.asm' on 'ol6-121-rac1' succeeded

CRS-2673: Attempting to stop 'ora.cluster_interconnect.haip' on 'ol6-121-rac1'

CRS-2677: Stop of 'ora.cluster_interconnect.haip' on 'ol6-121-rac1' succeeded

CRS-2673: Attempting to stop 'ora.cssd' on 'ol6-121-rac1'

CRS-2677: Stop of 'ora.cssd' on 'ol6-121-rac1' succeeded

CRS-2673: Attempting to stop 'ora.gipcd' on 'ol6-121-rac1'

CRS-2677: Stop of 'ora.gipcd' on 'ol6-121-rac1' succeeded

CRS-2793: Shutdown of Oracle High Availability Services-managed resources on 'ol6-121-rac1' has completed

CRS-4133: Oracle High Availability Services has been stopped.

CRS-4123: Starting Oracle High Availability Services-managed resources

CRS-2672: Attempting to start 'ora.mdnsd' on 'ol6-121-rac1'

CRS-2672: Attempting to start 'ora.evmd' on 'ol6-121-rac1'

CRS-2676: Start of 'ora.mdnsd' on 'ol6-121-rac1' succeeded

CRS-2676: Start of 'ora.evmd' on 'ol6-121-rac1' succeeded

CRS-2672: Attempting to start 'ora.gpnpd' on 'ol6-121-rac1'

CRS-2676: Start of 'ora.gpnpd' on 'ol6-121-rac1' succeeded

CRS-2672: Attempting to start 'ora.gipcd' on 'ol6-121-rac1'

CRS-2676: Start of 'ora.gipcd' on 'ol6-121-rac1' succeeded

CRS-2672: Attempting to start 'ora.cssdmonitor' on 'ol6-121-rac1'

CRS-2676: Start of 'ora.cssdmonitor' on 'ol6-121-rac1' succeeded

CRS-2672: Attempting to start 'ora.cssd' on 'ol6-121-rac1'

CRS-2672: Attempting to start 'ora.diskmon' on 'ol6-121-rac1'

CRS-2676: Start of 'ora.diskmon' on 'ol6-121-rac1' succeeded

CRS-2789: Cannot stop resource 'ora.diskmon' as it is not running on server 'ol6-121-rac1'

CRS-2676: Start of 'ora.cssd' on 'ol6-121-rac1' succeeded

CRS-2672: Attempting to start 'ora.cluster_interconnect.haip' on 'ol6-121-rac1'

CRS-2672: Attempting to start 'ora.ctssd' on 'ol6-121-rac1'

CRS-2676: Start of 'ora.ctssd' on 'ol6-121-rac1' succeeded

CRS-2676: Start of 'ora.cluster_interconnect.haip' on 'ol6-121-rac1' succeeded

CRS-2672: Attempting to start 'ora.asm' on 'ol6-121-rac1'

CRS-2676: Start of 'ora.asm' on 'ol6-121-rac1' succeeded

CRS-2672: Attempting to start 'ora.storage' on 'ol6-121-rac1'

CRS-2676: Start of 'ora.storage' on 'ol6-121-rac1' succeeded

CRS-2672: Attempting to start 'ora.crsd' on 'ol6-121-rac1'

CRS-2676: Start of 'ora.crsd' on 'ol6-121-rac1' succeeded

CRS-6023: Starting Oracle Cluster Ready Services-managed resources

CRS-6017: Processing resource auto-start for servers: ol6-121-rac1

CRS-6016: Resource auto-start has completed for server ol6-121-rac1

CRS-6024: Completed start of Oracle Cluster Ready Services-managed resources

CRS-4123: Oracle High Availability Services has been started.

2013/08/20 00:30:20 CLSRSC-343: Successfully started Oracle clusterware stack

CRS-2672: Attempting to start 'ora.net1.network' on 'ol6-121-rac1'

CRS-2676: Start of 'ora.net1.network' on 'ol6-121-rac1' succeeded

CRS-2672: Attempting to start 'ora.gns.vip' on 'ol6-121-rac1'

CRS-2676: Start of 'ora.gns.vip' on 'ol6-121-rac1' succeeded

CRS-2672: Attempting to start 'ora.gns' on 'ol6-121-rac1'

CRS-2676: Start of 'ora.gns' on 'ol6-121-rac1' succeeded

CRS-2672: Attempting to start 'ora.ASMNET1LSNR_ASM.lsnr' on 'ol6-121-rac1'

CRS-2676: Start of 'ora.ASMNET1LSNR_ASM.lsnr' on 'ol6-121-rac1' succeeded

CRS-2672: Attempting to start 'ora.asm' on 'ol6-121-rac1'

CRS-2676: Start of 'ora.asm' on 'ol6-121-rac1' succeeded

CRS-2672: Attempting to start 'ora.DATA.dg' on 'ol6-121-rac1'

CRS-2676: Start of 'ora.DATA.dg' on 'ol6-121-rac1' succeeded

CRS-2791: Starting shutdown of Oracle High Availability Services-managed resources on 'ol6-121-rac1'

CRS-2673: Attempting to stop 'ora.crsd' on 'ol6-121-rac1'

CRS-2790: Starting shutdown of Cluster Ready Services-managed resources on 'ol6-121-rac1'

CRS-2673: Attempting to stop 'ora.ol6-121-rac1.vip' on 'ol6-121-rac1'

CRS-2673: Attempting to stop 'ora.LISTENER_SCAN3.lsnr' on 'ol6-121-rac1'

CRS-2673: Attempting to stop 'ora.LISTENER_SCAN1.lsnr' on 'ol6-121-rac1'

CRS-2673: Attempting to stop 'ora.DATA.dg' on 'ol6-121-rac1'

CRS-2673: Attempting to stop 'ora.gns' on 'ol6-121-rac1'

CRS-2673: Attempting to stop 'ora.cvu' on 'ol6-121-rac1'

CRS-2673: Attempting to stop 'ora.LISTENER_SCAN2.lsnr' on 'ol6-121-rac1'

CRS-2677: Stop of 'ora.LISTENER_SCAN2.lsnr' on 'ol6-121-rac1' succeeded

CRS-2677: Stop of 'ora.LISTENER_SCAN1.lsnr' on 'ol6-121-rac1' succeeded

CRS-2673: Attempting to stop 'ora.scan1.vip' on 'ol6-121-rac1'

CRS-2673: Attempting to stop 'ora.scan2.vip' on 'ol6-121-rac1'

CRS-2677: Stop of 'ora.LISTENER_SCAN3.lsnr' on 'ol6-121-rac1' succeeded

CRS-2673: Attempting to stop 'ora.scan3.vip' on 'ol6-121-rac1'

CRS-2677: Stop of 'ora.cvu' on 'ol6-121-rac1' succeeded

CRS-2677: Stop of 'ora.scan1.vip' on 'ol6-121-rac1' succeeded

CRS-2677: Stop of 'ora.DATA.dg' on 'ol6-121-rac1' succeeded

CRS-2673: Attempting to stop 'ora.asm' on 'ol6-121-rac1'

CRS-2677: Stop of 'ora.ol6-121-rac1.vip' on 'ol6-121-rac1' succeeded

CRS-2677: Stop of 'ora.scan3.vip' on 'ol6-121-rac1' succeeded

CRS-2677: Stop of 'ora.asm' on 'ol6-121-rac1' succeeded

CRS-2673: Attempting to stop 'ora.ASMNET1LSNR_ASM.lsnr' on 'ol6-121-rac1'

CRS-2677: Stop of 'ora.scan2.vip' on 'ol6-121-rac1' succeeded

CRS-2677: Stop of 'ora.gns' on 'ol6-121-rac1' succeeded

CRS-2673: Attempting to stop 'ora.gns.vip' on 'ol6-121-rac1'

CRS-2677: Stop of 'ora.gns.vip' on 'ol6-121-rac1' succeeded

CRS-2677: Stop of 'ora.ASMNET1LSNR_ASM.lsnr' on 'ol6-121-rac1' succeeded

CRS-2673: Attempting to stop 'ora.ons' on 'ol6-121-rac1'

CRS-2677: Stop of 'ora.ons' on 'ol6-121-rac1' succeeded

CRS-2673: Attempting to stop 'ora.net1.network' on 'ol6-121-rac1'

CRS-2677: Stop of 'ora.net1.network' on 'ol6-121-rac1' succeeded

CRS-2792: Shutdown of Cluster Ready Services-managed resources on 'ol6-121-rac1' has completed

CRS-2677: Stop of 'ora.crsd' on 'ol6-121-rac1' succeeded

CRS-2673: Attempting to stop 'ora.ctssd' on 'ol6-121-rac1'

CRS-2673: Attempting to stop 'ora.evmd' on 'ol6-121-rac1'

CRS-2673: Attempting to stop 'ora.storage' on 'ol6-121-rac1'

CRS-2673: Attempting to stop 'ora.mdnsd' on 'ol6-121-rac1'

CRS-2673: Attempting to stop 'ora.drivers.acfs' on 'ol6-121-rac1'

CRS-2673: Attempting to stop 'ora.gpnpd' on 'ol6-121-rac1'

CRS-2677: Stop of 'ora.storage' on 'ol6-121-rac1' succeeded

CRS-2673: Attempting to stop 'ora.asm' on 'ol6-121-rac1'

CRS-2677: Stop of 'ora.drivers.acfs' on 'ol6-121-rac1' succeeded

CRS-2677: Stop of 'ora.mdnsd' on 'ol6-121-rac1' succeeded

CRS-2677: Stop of 'ora.gpnpd' on 'ol6-121-rac1' succeeded

CRS-2677: Stop of 'ora.evmd' on 'ol6-121-rac1' succeeded

CRS-2677: Stop of 'ora.ctssd' on 'ol6-121-rac1' succeeded

CRS-2677: Stop of 'ora.asm' on 'ol6-121-rac1' succeeded

CRS-2673: Attempting to stop 'ora.cluster_interconnect.haip' on 'ol6-121-rac1'

CRS-2677: Stop of 'ora.cluster_interconnect.haip' on 'ol6-121-rac1' succeeded

CRS-2673: Attempting to stop 'ora.cssd' on 'ol6-121-rac1'

CRS-2677: Stop of 'ora.cssd' on 'ol6-121-rac1' succeeded

CRS-2673: Attempting to stop 'ora.gipcd' on 'ol6-121-rac1'

CRS-2677: Stop of 'ora.gipcd' on 'ol6-121-rac1' succeeded

CRS-2793: Shutdown of Oracle High Availability Services-managed resources on 'ol6-121-rac1' has completed

CRS-4133: Oracle High Availability Services has been stopped.

CRS-4123: Starting Oracle High Availability Services-managed resources

CRS-2672: Attempting to start 'ora.mdnsd' on 'ol6-121-rac1'

CRS-2672: Attempting to start 'ora.evmd' on 'ol6-121-rac1'

CRS-2676: Start of 'ora.mdnsd' on 'ol6-121-rac1' succeeded

CRS-2676: Start of 'ora.evmd' on 'ol6-121-rac1' succeeded

CRS-2672: Attempting to start 'ora.gpnpd' on 'ol6-121-rac1'

CRS-2676: Start of 'ora.gpnpd' on 'ol6-121-rac1' succeeded

CRS-2672: Attempting to start 'ora.gipcd' on 'ol6-121-rac1'

CRS-2676: Start of 'ora.gipcd' on 'ol6-121-rac1' succeeded

CRS-2672: Attempting to start 'ora.cssdmonitor' on 'ol6-121-rac1'

CRS-2676: Start of 'ora.cssdmonitor' on 'ol6-121-rac1' succeeded

CRS-2672: Attempting to start 'ora.cssd' on 'ol6-121-rac1'

CRS-2672: Attempting to start 'ora.diskmon' on 'ol6-121-rac1'

CRS-2676: Start of 'ora.diskmon' on 'ol6-121-rac1' succeeded

CRS-2789: Cannot stop resource 'ora.diskmon' as it is not running on server 'ol6-121-rac1'

CRS-2676: Start of 'ora.cssd' on 'ol6-121-rac1' succeeded

CRS-2672: Attempting to start 'ora.cluster_interconnect.haip' on 'ol6-121-rac1'

CRS-2672: Attempting to start 'ora.ctssd' on 'ol6-121-rac1'

CRS-2676: Start of 'ora.ctssd' on 'ol6-121-rac1' succeeded

CRS-2676: Start of 'ora.cluster_interconnect.haip' on 'ol6-121-rac1' succeeded

CRS-2672: Attempting to start 'ora.asm' on 'ol6-121-rac1'

CRS-2676: Start of 'ora.asm' on 'ol6-121-rac1' succeeded

CRS-2672: Attempting to start 'ora.storage' on 'ol6-121-rac1'

CRS-2676: Start of 'ora.storage' on 'ol6-121-rac1' succeeded

CRS-2672: Attempting to start 'ora.crsd' on 'ol6-121-rac1'

CRS-2676: Start of 'ora.crsd' on 'ol6-121-rac1' succeeded

CRS-6023: Starting Oracle Cluster Ready Services-managed resources

CRS-6017: Processing resource auto-start for servers: ol6-121-rac1

CRS-2672: Attempting to start 'ora.cvu' on 'ol6-121-rac1'

CRS-2672: Attempting to start 'ora.ons' on 'ol6-121-rac1'

CRS-2676: Start of 'ora.cvu' on 'ol6-121-rac1' succeeded

CRS-2676: Start of 'ora.ons' on 'ol6-121-rac1' succeeded

CRS-2672: Attempting to start 'ora.scan2.vip' on 'ol6-121-rac1'

CRS-2672: Attempting to start 'ora.scan3.vip' on 'ol6-121-rac1'

CRS-2672: Attempting to start 'ora.scan1.vip' on 'ol6-121-rac1'

CRS-2672: Attempting to start 'ora.ol6-121-rac1.vip' on 'ol6-121-rac1'

CRS-2676: Start of 'ora.scan1.vip' on 'ol6-121-rac1' succeeded

CRS-2672: Attempting to start 'ora.LISTENER_SCAN1.lsnr' on 'ol6-121-rac1'

CRS-2676: Start of 'ora.scan3.vip' on 'ol6-121-rac1' succeeded

CRS-2672: Attempting to start 'ora.LISTENER_SCAN3.lsnr' on 'ol6-121-rac1'

CRS-2676: Start of 'ora.scan2.vip' on 'ol6-121-rac1' succeeded

CRS-2672: Attempting to start 'ora.LISTENER_SCAN2.lsnr' on 'ol6-121-rac1'

CRS-2676: Start of 'ora.ol6-121-rac1.vip' on 'ol6-121-rac1' succeeded

CRS-2676: Start of 'ora.LISTENER_SCAN1.lsnr' on 'ol6-121-rac1' succeeded

CRS-2676: Start of 'ora.LISTENER_SCAN3.lsnr' on 'ol6-121-rac1' succeeded

CRS-2676: Start of 'ora.LISTENER_SCAN2.lsnr' on 'ol6-121-rac1' succeeded

CRS-6016: Resource auto-start has completed for server ol6-121-rac1

CRS-6024: Completed start of Oracle Cluster Ready Services-managed resources

CRS-4123: Oracle High Availability Services has been started.

2013/08/20 00:37:55 CLSRSC-325: Configure Oracle Grid Infrastructure for a Cluster ... succeeded

[root@ol6-121-rac1 12.1.0.1]#

--Run on node2:

[root@ol6-121-rac2 ~]# /u01/app/oraInventory/orainstRoot.sh

Changing permissions of /u01/app/oraInventory.

Adding read,write permissions for group.

Removing read,write,execute permissions for world.

Changing groupname of /u01/app/oraInventory to oinstall.

The execution of the script is complete.

[root@ol6-121-rac2 ~]#

[root@ol6-121-rac2 ~]# /u01/app/12.1.0.1/grid/root.sh

Performing root user operation for Oracle 12c

The following environment variables are set as:

ORACLE_OWNER= oracle

ORACLE_HOME= /u01/app/12.1.0.1/grid

Enter the full pathname of the local bin directory: [/usr/local/bin]:

Copying dbhome to /usr/local/bin ...

Copying oraenv to /usr/local/bin ...

Copying coraenv to /usr/local/bin ...

Creating /etc/oratab file...

Entries will be added to the /etc/oratab file as needed by

Database Configuration Assistant when a database is created

Finished running generic part of root script.

Now product-specific root actions will be performed.

Using configuration parameter file: /u01/app/12.1.0.1/grid/crs/install/crsconfig_params

2013/08/20 00:38:48 CLSRSC-363: User ignored prerequisites during installation

OLR initialization - successful

2013/08/20 00:39:20 CLSRSC-330: Adding Clusterware entries to file 'oracle-ohasd.conf'

CRS-4133: Oracle High Availability Services has been stopped.

CRS-4123: Oracle High Availability Services has been started.

CRS-4133: Oracle High Availability Services has been stopped.

CRS-4123: Oracle High Availability Services has been started.

CRS-2791: Starting shutdown of Oracle High Availability Services-managed resources on 'ol6-121-rac2'

CRS-2673: Attempting to stop 'ora.drivers.acfs' on 'ol6-121-rac2'

CRS-2677: Stop of 'ora.drivers.acfs' on 'ol6-121-rac2' succeeded

CRS-2793: Shutdown of Oracle High Availability Services-managed resources on 'ol6-121-rac2' has completed

CRS-4133: Oracle High Availability Services has been stopped.

CRS-4123: Starting Oracle High Availability Services-managed resources

CRS-2672: Attempting to start 'ora.mdnsd' on 'ol6-121-rac2'

CRS-2672: Attempting to start 'ora.evmd' on 'ol6-121-rac2'

CRS-2676: Start of 'ora.mdnsd' on 'ol6-121-rac2' succeeded

CRS-2676: Start of 'ora.evmd' on 'ol6-121-rac2' succeeded

CRS-2672: Attempting to start 'ora.gpnpd' on 'ol6-121-rac2'

CRS-2676: Start of 'ora.gpnpd' on 'ol6-121-rac2' succeeded

CRS-2672: Attempting to start 'ora.gipcd' on 'ol6-121-rac2'

CRS-2676: Start of 'ora.gipcd' on 'ol6-121-rac2' succeeded

CRS-2672: Attempting to start 'ora.cssdmonitor' on 'ol6-121-rac2'

CRS-2676: Start of 'ora.cssdmonitor' on 'ol6-121-rac2' succeeded

CRS-2672: Attempting to start 'ora.cssd' on 'ol6-121-rac2'

CRS-2672: Attempting to start 'ora.diskmon' on 'ol6-121-rac2'

CRS-2676: Start of 'ora.diskmon' on 'ol6-121-rac2' succeeded

CRS-2789: Cannot stop resource 'ora.diskmon' as it is not running on server 'ol6-121-rac2'

CRS-2676: Start of 'ora.cssd' on 'ol6-121-rac2' succeeded

CRS-2672: Attempting to start 'ora.cluster_interconnect.haip' on 'ol6-121-rac2'

CRS-2672: Attempting to start 'ora.ctssd' on 'ol6-121-rac2'

CRS-2676: Start of 'ora.cluster_interconnect.haip' on 'ol6-121-rac2' succeeded

CRS-2676: Start of 'ora.ctssd' on 'ol6-121-rac2' succeeded

CRS-2672: Attempting to start 'ora.storage' on 'ol6-121-rac2'

CRS-2676: Start of 'ora.storage' on 'ol6-121-rac2' succeeded

CRS-2672: Attempting to start 'ora.crsd' on 'ol6-121-rac2'

CRS-2676: Start of 'ora.crsd' on 'ol6-121-rac2' succeeded

CRS-6017: Processing resource auto-start for servers: ol6-121-rac2

CRS-6016: Resource auto-start has completed for server ol6-121-rac2

CRS-6024: Completed start of Oracle Cluster Ready Services-managed resources

CRS-4123: Oracle High Availability Services has been started.

2013/08/20 00:43:36 CLSRSC-343: Successfully started Oracle clusterware stack

2013/08/20 00:43:41 CLSRSC-325: Configure Oracle Grid Infrastructure for a Cluster ... succeeded

[root@ol6-121-rac2 ~]#
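Both root.sh runs above end with `CLSRSC-325: Configure Oracle Grid Infrastructure for a Cluster ... succeeded`. If you script the installation, a saved root.sh log could be checked with something like the sketch below. It assumes the output was captured with `tee` (for example `root.sh 2>&1 | tee /tmp/root_rac2.log`; the log path and the function names are hypothetical), and treating the CLSRSC-325 line as the success signal is only a heuristic.

```shell
# Sketch: inspect a root.sh log that was saved with tee (log path is up to you).
# Heuristic: the run is treated as successful if the final CLSRSC-325 line is present.

rootsh_succeeded() {
  # $1: path to the saved root.sh output
  grep -q 'CLSRSC-325: Configure Oracle Grid Infrastructure for a Cluster \.\.\. succeeded' "$1"
}

rootsh_failures() {
  # Print CRS-/CLSRSC- messages that mention a failure or error; prints nothing otherwise.
  grep -iE '(CRS|CLSRSC)-[0-9]+:.*(failed|error)' "$1" || true
}
```

Usage: `rootsh_succeeded /tmp/root_rac2.log && echo ok`, and `rootsh_failures /tmp/root_rac2.log` to list suspicious lines.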

ASM configuration: no parameters need to be changed. Because node 2 is a leaf node, no ASM instance will be started on it.

Once the ASM configuration finishes, the flex cluster installation is complete.

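The flex cluster is now installed. Before moving on to the DB installation, let's use ifconfig (plus crs_stat and ps) to look at the current network configuration and the related resources: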

Node 1 is the hub node. Its state is:

eth0 Link encap:Ethernet HWaddr 08:00:27:06:72:4B

inet addr:192.168.56.121 Bcast:192.168.56.255 Mask:255.255.255.0

inet6 addr: fe80::a00:27ff:fe06:724b/64 Scope:Link

UP BROADCAST RUNNING MULTICAST MTU:1500 Metric:1

RX packets:1204 errors:0 dropped:0 overruns:0 frame:0

TX packets:1211 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:1000

RX bytes:166853 (162.9 KiB) TX bytes:208611 (203.7 KiB)

eth0:1 Link encap:Ethernet HWaddr 08:00:27:06:72:4B

inet addr:192.168.56.108 Bcast:192.168.56.255 Mask:255.255.255.0

UP BROADCAST RUNNING MULTICAST MTU:1500 Metric:1

eth0:2 Link encap:Ethernet HWaddr 08:00:27:06:72:4B

inet addr:192.168.56.12 Bcast:192.168.56.255 Mask:255.255.255.0

UP BROADCAST RUNNING MULTICAST MTU:1500 Metric:1

eth0:3 Link encap:Ethernet HWaddr 08:00:27:06:72:4B

inet addr:192.168.56.14 Bcast:192.168.56.255 Mask:255.255.255.0

UP BROADCAST RUNNING MULTICAST MTU:1500 Metric:1

eth0:4 Link encap:Ethernet HWaddr 08:00:27:06:72:4B

inet addr:192.168.56.13 Bcast:192.168.56.255 Mask:255.255.255.0

UP BROADCAST RUNNING MULTICAST MTU:1500 Metric:1

eth0:5 Link encap:Ethernet HWaddr 08:00:27:06:72:4B

inet addr:192.168.56.11 Bcast:192.168.56.255 Mask:255.255.255.0

UP BROADCAST RUNNING MULTICAST MTU:1500 Metric:1

eth1 Link encap:Ethernet HWaddr 08:00:27:4D:0D:02

inet addr:192.168.57.31 Bcast:192.168.57.255 Mask:255.255.255.0

inet6 addr: fe80::a00:27ff:fe4d:d02/64 Scope:Link

UP BROADCAST RUNNING MULTICAST MTU:1500 Metric:1

RX packets:1147 errors:0 dropped:0 overruns:0 frame:0

TX packets:1858 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:1000

RX bytes:523883 (511.6 KiB) TX bytes:1344884 (1.2 MiB)

eth1:1 Link encap:Ethernet HWaddr 08:00:27:4D:0D:02

inet addr:169.254.2.250 Bcast:169.254.255.255 Mask:255.255.0.0

UP BROADCAST RUNNING MULTICAST MTU:1500 Metric:1

lo Link encap:Local Loopback

inet addr:127.0.0.1 Mask:255.0.0.0

inet6 addr: ::1/128 Scope:Host

UP LOOPBACK RUNNING MTU:16436 Metric:1

RX packets:1871 errors:0 dropped:0 overruns:0 frame:0

TX packets:1871 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:0

RX bytes:2288895 (2.1 MiB) TX bytes:2288895 (2.1 MiB)

[oracle@ol6-121-rac1 ~]$ crs_stat -t

Name Type Target State Host

------------------------------------------------------------

ora....SM.lsnr ora....er.type ONLINE ONLINE ol6-...rac1

ora.DATA.dg ora....up.type ONLINE ONLINE ol6-...rac1

ora....ER.lsnr ora....er.type ONLINE ONLINE ol6-...rac1

ora....AF.lsnr ora....er.type ONLINE ONLINE ol6-...rac2

ora....N1.lsnr ora....er.type ONLINE ONLINE ol6-...rac1

ora....N2.lsnr ora....er.type ONLINE ONLINE ol6-...rac1

ora....N3.lsnr ora....er.type ONLINE ONLINE ol6-...rac1

ora.asm ora.asm.type ONLINE ONLINE ol6-...rac1

ora.cvu ora.cvu.type ONLINE ONLINE ol6-...rac1

ora.gns ora.gns.type ONLINE ONLINE ol6-...rac1

ora.gns.vip ora....ip.type ONLINE ONLINE ol6-...rac1

ora....network ora....rk.type ONLINE ONLINE ol6-...rac1

ora.oc4j ora.oc4j.type OFFLINE OFFLINE

ora....C1.lsnr application ONLINE ONLINE ol6-...rac1

ora....ac1.ons application ONLINE ONLINE ol6-...rac1

ora....ac1.vip ora....t1.type ONLINE ONLINE ol6-...rac1

ora.ons ora.ons.type ONLINE ONLINE ol6-...rac1

ora.proxy_advm ora....vm.type ONLINE ONLINE ol6-...rac1

ora.scan1.vip ora....ip.type ONLINE ONLINE ol6-...rac1

ora.scan2.vip ora....ip.type ONLINE ONLINE ol6-...rac1

ora.scan3.vip ora....ip.type ONLINE ONLINE ol6-...rac1

[oracle@ol6-121-rac1 ~]$ ps -ef |grep asm

root 2416 2 0 22:45 ? 00:00:00 [asmWorkerThread]

root 2417 2 0 22:45 ? 00:00:00 [asmWorkerThread]

root 2418 2 0 22:45 ? 00:00:00 [asmWorkerThread]

root 2419 2 0 22:45 ? 00:00:00 [asmWorkerThread]

root 2420 2 0 22:45 ? 00:00:00 [asmWorkerThread]

oracle 2746 1 0 22:46 ? 00:00:00 asm_pmon_+ASM1

oracle 2748 1 0 22:46 ? 00:00:00 asm_psp0_+ASM1

oracle 2750 1 5 22:46 ? 00:00:22 asm_vktm_+ASM1

oracle 2754 1 0 22:46 ? 00:00:00 asm_gen0_+ASM1

oracle 2756 1 0 22:46 ? 00:00:00 asm_mman_+ASM1

oracle 2760 1 0 22:46 ? 00:00:00 asm_diag_+ASM1

oracle 2762 1 0 22:46 ? 00:00:00 asm_ping_+ASM1

oracle 2764 1 0 22:46 ? 00:00:00 asm_dia0_+ASM1

oracle 2766 1 0 22:46 ? 00:00:00 asm_lmon_+ASM1

oracle 2768 1 0 22:46 ? 00:00:00 asm_lmd0_+ASM1

oracle 2770 1 0 22:46 ? 00:00:02 asm_lms0_+ASM1

oracle 2774 1 0 22:46 ? 00:00:00 asm_lmhb_+ASM1

oracle 2776 1 0 22:46 ? 00:00:00 asm_lck1_+ASM1

oracle 2778 1 0 22:46 ? 00:00:00 asm_gcr0_+ASM1

oracle 2780 1 0 22:46 ? 00:00:00 asm_dbw0_+ASM1

oracle 2782 1 0 22:46 ? 00:00:00 asm_lgwr_+ASM1

oracle 2784 1 0 22:46 ? 00:00:00 asm_ckpt_+ASM1

oracle 2786 1 0 22:46 ? 00:00:00 asm_smon_+ASM1

oracle 2788 1 0 22:46 ? 00:00:00 asm_lreg_+ASM1

oracle 2790 1 0 22:46 ? 00:00:00 asm_rbal_+ASM1

oracle 2792 1 0 22:46 ? 00:00:00 asm_gmon_+ASM1

oracle 2794 1 0 22:46 ? 00:00:00 asm_mmon_+ASM1

oracle 2796 1 0 22:46 ? 00:00:00 asm_mmnl_+ASM1

oracle 2798 1 0 22:46 ? 00:00:00 asm_lck0_+ASM1

oracle 2886 1 0 22:47 ? 00:00:00 asm_asmb_+ASM1

oracle 2888 1 0 22:47 ? 00:00:00 oracle+ASM1_asmb_+asm1 (DESCRIPTION=(LOCAL=YES)(ADDRESS=(PROTOCOL=beq)))

oracle 6606 3162 0 22:54 pts/0 00:00:00 grep asm

[oracle@ol6-121-rac1 ~]$ ps -ef |grep tns

root 10 2 0 22:44 ? 00:00:00 [netns]

oracle 3146 1 0 22:47 ? 00:00:00 /u01/app/12.1.0.1/grid/bin/tnslsnr ASMNET1LSNR_ASM -no_crs_notify -inherit

oracle 3156 1 0 22:47 ? 00:00:00 /u01/app/12.1.0.1/grid/bin/tnslsnr LISTENER_SCAN1 -no_crs_notify -inherit

oracle 3187 1 0 22:47 ? 00:00:00 /u01/app/12.1.0.1/grid/bin/tnslsnr LISTENER_SCAN2 -no_crs_notify -inherit

oracle 3199 1 0 22:47 ? 00:00:00 /u01/app/12.1.0.1/grid/bin/tnslsnr LISTENER_SCAN3 -no_crs_notify -inherit

oracle 3237 1 0 22:47 ? 00:00:00 /u01/app/12.1.0.1/grid/bin/tnslsnr LISTENER -no_crs_notify -inherit

oracle 6608 3162 0 22:54 pts/0 00:00:00 grep tns

[oracle@ol6-121-rac1 ~]$

[oracle@ol6-121-rac1 ~]$ ps -ef |grep ohas

root 1162 1 0 22:45 ? 00:00:00 /bin/sh /etc/init.d/init.ohasd run

root 2061 1 1 22:45 ? 00:00:09 /u01/app/12.1.0.1/grid/bin/ohasd.bin reboot

oracle 6611 3162 0 22:54 pts/0 00:00:00 grep ohas

[oracle@ol6-121-rac1 ~]$
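On the hub node, the addresses that Clusterware brings up appear as interface aliases: 192.168.56.108 is the GNS VIP, 192.168.56.11 the node VIP, 192.168.56.12 to 192.168.56.14 the SCAN VIPs, and 169.254.2.250 on eth1:1 is the HAIP address on the private interconnect. To list just the interface/address pairs instead of reading the full listing, a small awk sketch like the one below could be used. It assumes the net-tools `ifconfig` format shown here (OL6), and the function name is hypothetical.

```shell
# List "interface IPv4-address" pairs from net-tools ifconfig output on stdin.
# OL6 format: interface header lines start in column 1,
# detail lines (e.g. "inet addr:...") are indented.
ifconfig_addrs() {
  awk '
    /^[^ \t]/   { iface = $1 }   # header line such as "eth0:1    Link encap:Ethernet ..."
    /inet addr:/ {               # IPv4 line; "inet6 addr:" does not match
      for (i = 1; i <= NF; i++)
        if ($i ~ /^addr:/) { sub(/^addr:/, "", $i); print iface, $i }
    }
  '
}
```

Usage: `ifconfig | ifconfig_addrs`; appending `| grep ':'` keeps only the alias interfaces (eth0:1, eth1:1, ...), since IPv4 addresses contain no colon.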

Node 2 is the leaf node. Its state is:

eth0 Link encap:Ethernet HWaddr 08:00:27:8E:CE:20

inet addr:192.168.56.122 Bcast:192.168.56.255 Mask:255.255.255.0

inet6 addr: fe80::a00:27ff:fe8e:ce20/64 Scope:Link

UP BROADCAST RUNNING MULTICAST MTU:1500 Metric:1

RX packets:1049 errors:0 dropped:0 overruns:0 frame:0

TX packets:854 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:1000

RX bytes:157826 (154.1 KiB) TX bytes:135961 (132.7 KiB)

eth1 Link encap:Ethernet HWaddr 08:00:27:3B:C4:0A

inet addr:192.168.57.32 Bcast:192.168.57.255 Mask:255.255.255.0

inet6 addr: fe80::a00:27ff:fe3b:c40a/64 Scope:Link

UP BROADCAST RUNNING MULTICAST MTU:1500 Metric:1

RX packets:1886 errors:0 dropped:0 overruns:0 frame:0

TX packets:1242 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:1000

RX bytes:1353953 (1.2 MiB) TX bytes:543170 (530.4 KiB)

lo Link encap:Local Loopback

inet addr:127.0.0.1 Mask:255.0.0.0

inet6 addr: ::1/128 Scope:Host

UP LOOPBACK RUNNING MTU:16436 Metric:1

RX packets:6 errors:0 dropped:0 overruns:0 frame:0

TX packets:6 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:0

RX bytes:408 (408.0 b) TX bytes:408 (408.0 b)

[oracle@ol6-121-rac2 ~]$ crs_stat -t

Name Type Target State Host

------------------------------------------------------------

ora....SM.lsnr ora....er.type ONLINE ONLINE ol6-...rac1

ora.DATA.dg ora....up.type ONLINE ONLINE ol6-...rac1

ora....ER.lsnr ora....er.type ONLINE ONLINE ol6-...rac1

ora....AF.lsnr ora....er.type ONLINE ONLINE ol6-...rac2

ora....N1.lsnr ora....er.type ONLINE ONLINE ol6-...rac1

ora....N2.lsnr ora....er.type ONLINE ONLINE ol6-...rac1

ora....N3.lsnr ora....er.type ONLINE ONLINE ol6-...rac1

ora.asm ora.asm.type ONLINE ONLINE ol6-...rac1

ora.cvu ora.cvu.type ONLINE ONLINE ol6-...rac1

ora.gns ora.gns.type ONLINE ONLINE ol6-...rac1

ora.gns.vip ora....ip.type ONLINE ONLINE ol6-...rac1

ora....network ora....rk.type ONLINE ONLINE ol6-...rac1

ora.oc4j ora.oc4j.type OFFLINE OFFLINE

ora....C1.lsnr application ONLINE ONLINE ol6-...rac1

ora....ac1.ons application ONLINE ONLINE ol6-...rac1

ora....ac1.vip ora....t1.type ONLINE ONLINE ol6-...rac1

ora.ons ora.ons.type ONLINE ONLINE ol6-...rac1

ora.proxy_advm ora....vm.type ONLINE ONLINE ol6-...rac1

ora.scan1.vip ora....ip.type ONLINE ONLINE ol6-...rac1

ora.scan2.vip ora....ip.type ONLINE ONLINE ol6-...rac1

ora.scan3.vip ora....ip.type ONLINE ONLINE ol6-...rac1

[oracle@ol6-121-rac2 ~]$ ps -ef |grep asm

oracle 2663 2566 0 22:55 pts/0 00:00:00 grep asm

[oracle@ol6-121-rac2 ~]$ ps -ef |grep tns

root 10 2 0 22:46 ? 00:00:00 [netns]

oracle 2641 1 0 22:53 ? 00:00:00 /u01/app/12.1.0.1/grid/bin/tnslsnr LISTENER_LEAF -no_crs_notify -inherit

oracle 2666 2566 0 22:55 pts/0 00:00:00 grep tns

[oracle@ol6-121-rac2 ~]$ ps -ef |grep ohas

root 1124 1 0 22:46 ? 00:00:00 /bin/sh /etc/init.d/init.ohasd run

root 2058 1 2 22:47 ? 00:00:14 /u01/app/12.1.0.1/grid/bin/ohasd.bin reboot

oracle 2668 2566 0 22:55 pts/0 00:00:00 grep ohas

[oracle@ol6-121-rac2 ~]$
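On the leaf node, `ps` shows no `asm_*` background processes and the only listener is `LISTENER_LEAF`, while the hub node runs the `+ASM1` instance plus the ASM, SCAN and local listeners. This comparison can be scripted from `ps -ef` output; the sketch below uses hypothetical function names and relies on the ASM PMON process being named `asm_pmon_<SID>`, as in the hub node listing above.

```shell
# Print the ASM instance name (e.g. +ASM1) found in "ps -ef" output on stdin;
# prints nothing on a node without an ASM instance (such as a leaf node).
asm_instance_from_ps() {
  # ps -ef columns: UID PID PPID C STIME TTY TIME CMD...; CMD starts at field 8
  awk '{ for (i = 8; i <= NF; i++) if ($i ~ /^asm_pmon_/) { sub(/^asm_pmon_/, "", $i); print $i } }'
}

# Print the listener names started as ".../tnslsnr <NAME> ..." in "ps -ef" output on stdin.
listeners_from_ps() {
  awk '{ for (i = 8; i < NF; i++) if ($i ~ /\/tnslsnr$/) print $(i + 1) }'
}
```

Usage: `ps -ef | asm_instance_from_ps` and `ps -ef | listeners_from_ps`, run on each node.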

12c flex cluster notes (2)

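With the cluster installed, let's install the DB. As I mentioned in the previous post, a DB cannot be installed on a leaf node (at least for now), so it can only be installed on hub nodes.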

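On a hub node you can install a single-instance DB, a RAC One Node DB, or a RAC DB. Here I want a 2-node RAC. But I only have 2 nodes, one hub node and one leaf node, and RAC has to be installed on hub nodes. So I first need to convert the leaf node into a hub node, and then install the 2-node RAC database.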

First, let's check the cluster with a few common commands:

1. Node VIP status:

[oracle@ol6-121-rac1 ~]$ srvctl status vip -node ol6-121-rac1

VIP 192.168.56.11 is enabled

VIP 192.168.56.11 is running on node: ol6-121-rac1

[oracle@ol6-121-rac1 ~]$ srvctl status vip -node ol6-121-rac2

VIP 192.168.56.19 is enabled

VIP 192.168.56.19 is running on node: ol6-121-rac2

2. SCAN is running normally: two SCAN VIPs are on node 1 and one is on node 2.

[oracle@ol6-121-rac1 ~]$ srvctl status scan -all

SCAN VIP scan1 is enabled

SCAN VIP scan1 is running on node ol6-121-rac2

SCAN VIP scan2 is enabled

SCAN VIP scan2 is running on node ol6-121-rac1

SCAN VIP scan3 is enabled

SCAN VIP scan3 is running on node ol6-121-rac1

3. DNS resolves the SCAN name to 192.168.56.12, 192.168.56.13 and 192.168.56.14.

[oracle@ol6-121-rac1 ~]$ nslookup ol6-121-cluster-scan.grid.localdomain

Server: 192.168.56.1

Address: 192.168.56.1#53

Non-authoritative answer:

Name: ol6-121-cluster-scan.grid.localdomain

Address: 192.168.56.13

Name: ol6-121-cluster-scan.grid.localdomain

Address: 192.168.56.12

Name: ol6-121-cluster-scan.grid.localdomain

Address: 192.168.56.14

4. GNS is running normally, on node 1.

[oracle@ol6-121-rac1 ~]$ srvctl status gns -v

GNS is running on node ol6-121-rac1.

GNS is enabled on node ol6-121-rac1.

5. The GNS VIP is 192.168.56.108. It is a fixed address; as I explained in point 4 of the previous post on flex cluster, the GNS VIP must be static.

[oracle@ol6-121-rac1 ~]$ nslookup gns-vip.localdomain

Server: 192.168.56.1

Address: 192.168.56.1#53

Name: gns-vip.localdomain

Address: 192.168.56.108

6. A more detailed check of GNS:

[root@ol6-121-rac1 ~]# srvctl config gns -a -l

GNS is enabled.

GNS is listening for DNS server requests on port 53

GNS is using port 5,353 to connect to mDNS

GNS status: OK

Domain served by GNS: grid.localdomain

GNS version: 12.1.0.1.0

Globally unique identifier of the cluster where GNS is running: 3d52171d97fddf8cff384db43f6d1459

Name of the cluster where GNS is running: ol6-121-cluster

Cluster type: server.

GNS log level: 1.

GNS listening addresses: tcp://192.168.56.108:38182.

[root@ol6-121-rac1 ~]#
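Points 2 and 3 above can also be checked mechanically, for example to compare SCAN VIP placement before and after a role change. The sketch below parses the `srvctl status scan -all` and `nslookup` output formats shown above; the function names are hypothetical, and the parsing assumes exactly those message formats.

```shell
# Count SCAN VIPs per node from "srvctl status scan -all" output on stdin.
scan_vips_per_node() {
  awk '/SCAN VIP .* is running on node/ { count[$NF]++ }
       END { for (n in count) print n, count[n] }' | sort
}

# Print the answer addresses from nslookup output on stdin, sorted numerically.
# Lines before the first "Name:" (the DNS server's own "Address: x#53") are skipped.
nslookup_answers() {
  awk '/^Name:/ { in_answer = 1 }
       in_answer && /^Address:/ { print $2 }' | sort -t . -k1,1n -k2,2n -k3,3n -k4,4n
}
```

Usage: `srvctl status scan -all | scan_vips_per_node`, and `nslookup ol6-121-cluster-scan.grid.localdomain | nslookup_answers | wc -l`, which should report 3 in this setup.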

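The full state of the other resources, i.e. the complete output of crsctl stat res -p, is too long to show here, so I have appended it at the end of this post.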
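Rather than reading the whole `crsctl stat res -p` listing, it can be condensed to one line per resource. The sketch below assumes the `KEY=VALUE` layout of the appendix, where each resource begins with a `NAME=` line, and keeps only `NAME`, `TYPE` and `SERVER_CATEGORY` (the attribute where hub-only resources show `ora.hub.category`). The function name is hypothetical.

```shell
# Condense "crsctl stat res -p" output on stdin to tab-separated lines:
#   NAME  TYPE  SERVER_CATEGORY   ("-" when SERVER_CATEGORY is empty or absent)
summarize_res_profiles() {
  awk -F= '
    # Print the resource collected so far, if any.
    function emit(   c) {
      if (name == "") return
      c = (category == "" ? "-" : category)
      print name "\t" type "\t" c
    }
    $1 == "NAME"            { emit(); name = $2; type = ""; category = "" }
    $1 == "TYPE"            { type = $2 }
    $1 == "SERVER_CATEGORY" { category = $2 }
    END                     { emit() }
  '
}
```

Usage: `crsctl stat res -p | summarize_res_profiles`.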

Now let's convert the leaf node to a hub node. Note that both the cluster and ASM must be running in flex mode.

First, check that both are running in flex mode:

[oracle@ol6-121-rac1 ~]$ crsctl get cluster mode status

Cluster is running in "flex" mode

--Check ASM:

[oracle@ol6-121-rac1 ~]$ asmcmd

ASMCMD> showclustermode

ASM cluster : Flex mode enabled

ASMCMD>
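The two checks above can be wrapped into a single pre-check before changing a node's role. This is a sketch under assumptions: it runs as the Grid owner with the Grid home's `bin` directory in `PATH` and `ORACLE_SID` set to the local ASM instance (e.g. `+ASM1`) so that `asmcmd` can be called non-interactively; the function names are hypothetical, and the matched strings are the ones printed above.

```shell
# Pre-check for a role change: both the cluster and ASM must run in Flex mode.
cluster_is_flex() {
  crsctl get cluster mode status | grep -q '"flex" mode'
}

asm_is_flex() {
  asmcmd showclustermode | grep -q 'Flex mode enabled'
}

flex_precheck() {
  cluster_is_flex || { echo "cluster is not in flex mode" >&2; return 1; }
  asm_is_flex     || { echo "ASM is not in flex mode" >&2; return 1; }
  echo "cluster and ASM are both in flex mode"
}
```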

Next, check each node's configured role; we can see that node2 is a leaf node:

[oracle@ol6-121-rac1 ~]$ crsctl get node role config

Node 'ol6-121-rac1' configured role is 'hub'

--Node 2:

[root@ol6-121-rac2 ~]# crsctl get node role config

Node 'ol6-121-rac2' configured role is 'leaf'

Now start the conversion. We only need to convert node2 into a hub node.

There are only two steps: first, run crsctl set node role hub; second, restart CRS.
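These two steps can be wrapped in a small script for the node whose role is changing. This is only a sketch: it assumes it runs as root on that node with `crsctl` from the Grid home in `PATH`, the function names are hypothetical, and it simply stops at the first failing command rather than trying to recover.

```shell
# Sketch: change this node's flex cluster role (hub <-> leaf) in two steps,
# then verify it. Run as root on the node being converted.

require_root() {
  [ "$(id -u)" -eq 0 ] || { echo "must be run as root" >&2; return 1; }
}

# Extract the role from: Node '<name>' configured role is '<role>'
configured_role() {
  crsctl get node role config | sed -n "s/.*configured role is '\([a-z]*\)'.*/\1/p"
}

change_node_role() {
  local target="$1"   # hub or leaf
  case "$target" in
    hub|leaf) ;;
    *) echo "usage: change_node_role hub|leaf" >&2; return 2 ;;
  esac
  require_root || return 1
  if [ "$(configured_role)" = "$target" ]; then
    echo "already configured as $target"
    return 0
  fi
  crsctl set node role "$target" || return 1   # step 1: expect CRS-4408
  crsctl stop crs || return 1                  # step 2a: stop the local stack
  crsctl start crs -wait || return 1           # step 2b: start it, waiting for completion
  [ "$(configured_role)" = "$target" ]         # confirm the new configured role
}
```

Usage: source the file as root on node2 and call `change_node_role hub` (or `change_node_role leaf` for the reverse conversion).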

LEAF -> HUB:

[root@ol6-121-rac2 ~]# crsctl set node role hub

CRS-4408: Node 'ol6-121-rac2' configured role successfully changed; restart Oracle High Availability Services for new role to take effect.

--Restart CRS, starting with stop crs. Note that CRS must be restarted as root.

[root@ol6-121-rac2 ~]# crsctl stop crs

CRS-2791: Starting shutdown of Oracle High Availability Services-managed resources on 'ol6-121-rac2'

CRS-2673: Attempting to stop 'ora.crsd' on 'ol6-121-rac2'

CRS-2677: Stop of 'ora.crsd' on 'ol6-121-rac2' succeeded

CRS-2673: Attempting to stop 'ora.drivers.acfs' on 'ol6-121-rac2'

CRS-2673: Attempting to stop 'ora.cluster_interconnect.haip' on 'ol6-121-rac2'

CRS-2673: Attempting to stop 'ora.storage' on 'ol6-121-rac2'

CRS-2673: Attempting to stop 'ora.mdnsd' on 'ol6-121-rac2'

CRS-2673: Attempting to stop 'ora.gpnpd' on 'ol6-121-rac2'

CRS-2677: Stop of 'ora.drivers.acfs' on 'ol6-121-rac2' succeeded

CRS-2677: Stop of 'ora.cluster_interconnect.haip' on 'ol6-121-rac2' succeeded

CRS-2677: Stop of 'ora.storage' on 'ol6-121-rac2' succeeded

CRS-2673: Attempting to stop 'ora.ctssd' on 'ol6-121-rac2'

CRS-2673: Attempting to stop 'ora.evmd' on 'ol6-121-rac2'

CRS-2677: Stop of 'ora.gpnpd' on 'ol6-121-rac2' succeeded

CRS-2677: Stop of 'ora.evmd' on 'ol6-121-rac2' succeeded

CRS-2677: Stop of 'ora.mdnsd' on 'ol6-121-rac2' succeeded

CRS-2677: Stop of 'ora.ctssd' on 'ol6-121-rac2' succeeded

CRS-2673: Attempting to stop 'ora.cssd' on 'ol6-121-rac2'

CRS-2677: Stop of 'ora.cssd' on 'ol6-121-rac2' succeeded

CRS-2673: Attempting to stop 'ora.gipcd' on 'ol6-121-rac2'

CRS-2677: Stop of 'ora.gipcd' on 'ol6-121-rac2' succeeded

CRS-2793: Shutdown of Oracle High Availability Services-managed resources on 'ol6-121-rac2' has completed

CRS-4133: Oracle High Availability Services has been stopped.

--After the stop completes, start CRS again:

[root@ol6-121-rac2 ~]# crsctl start crs -wait

CRS-4123: Starting Oracle High Availability Services-managed resources

CRS-2672: Attempting to start 'ora.mdnsd' on 'ol6-121-rac2'

CRS-2672: Attempting to start 'ora.evmd' on 'ol6-121-rac2'

CRS-2676: Start of 'ora.evmd' on 'ol6-121-rac2' succeeded

CRS-2676: Start of 'ora.mdnsd' on 'ol6-121-rac2' succeeded

CRS-2672: Attempting to start 'ora.gpnpd' on 'ol6-121-rac2'

CRS-2676: Start of 'ora.gpnpd' on 'ol6-121-rac2' succeeded

CRS-2672: Attempting to start 'ora.gipcd' on 'ol6-121-rac2'

CRS-2676: Start of 'ora.gipcd' on 'ol6-121-rac2' succeeded

CRS-2672: Attempting to start 'ora.cssdmonitor' on 'ol6-121-rac2'

CRS-2676: Start of 'ora.cssdmonitor' on 'ol6-121-rac2' succeeded

CRS-2672: Attempting to start 'ora.cssd' on 'ol6-121-rac2'

CRS-2672: Attempting to start 'ora.diskmon' on 'ol6-121-rac2'

CRS-2676: Start of 'ora.diskmon' on 'ol6-121-rac2' succeeded

CRS-2789: Cannot stop resource 'ora.diskmon' as it is not running on server 'ol6-121-rac2'

CRS-2676: Start of 'ora.cssd' on 'ol6-121-rac2' succeeded

CRS-2672: Attempting to start 'ora.cluster_interconnect.haip' on 'ol6-121-rac2'

CRS-2672: Attempting to start 'ora.ctssd' on 'ol6-121-rac2'

CRS-2676: Start of 'ora.ctssd' on 'ol6-121-rac2' succeeded

CRS-2676: Start of 'ora.cluster_interconnect.haip' on 'ol6-121-rac2' succeeded

CRS-2672: Attempting to start 'ora.asm' on 'ol6-121-rac2'

CRS-2676: Start of 'ora.asm' on 'ol6-121-rac2' succeeded

CRS-2672: Attempting to start 'ora.storage' on 'ol6-121-rac2'

CRS-2676: Start of 'ora.storage' on 'ol6-121-rac2' succeeded

CRS-2672: Attempting to start 'ora.crsd' on 'ol6-121-rac2'

CRS-2676: Start of 'ora.crsd' on 'ol6-121-rac2' succeeded

CRS-6017: Processing resource auto-start for servers: ol6-121-rac2

CRS-2672: Attempting to start 'ora.ASMNET1LSNR_ASM.lsnr' on 'ol6-121-rac2'

CRS-2673: Attempting to stop 'ora.LISTENER_SCAN1.lsnr' on 'ol6-121-rac1'

CRS-2673: Attempting to stop 'ora.ol6-121-rac2.vip' on 'ol6-121-rac1'

CRS-2672: Attempting to start 'ora.ons' on 'ol6-121-rac2'

CRS-2677: Stop of 'ora.LISTENER_SCAN1.lsnr' on 'ol6-121-rac1' succeeded

CRS-2673: Attempting to stop 'ora.scan1.vip' on 'ol6-121-rac1'

CRS-2677: Stop of 'ora.ol6-121-rac2.vip' on 'ol6-121-rac1' succeeded

CRS-2672: Attempting to start 'ora.ol6-121-rac2.vip' on 'ol6-121-rac2'

CRS-2677: Stop of 'ora.scan1.vip' on 'ol6-121-rac1' succeeded

CRS-2672: Attempting to start 'ora.scan1.vip' on 'ol6-121-rac2'

CRS-2676: Start of 'ora.scan1.vip' on 'ol6-121-rac2' succeeded

CRS-2672: Attempting to start 'ora.LISTENER_SCAN1.lsnr' on 'ol6-121-rac2'

CRS-2676: Start of 'ora.ol6-121-rac2.vip' on 'ol6-121-rac2' succeeded

CRS-2672: Attempting to start 'ora.LISTENER.lsnr' on 'ol6-121-rac2'

CRS-2676: Start of 'ora.ASMNET1LSNR_ASM.lsnr' on 'ol6-121-rac2' succeeded

CRS-2676: Start of 'ora.ons' on 'ol6-121-rac2' succeeded

CRS-2676: Start of 'ora.LISTENER_SCAN1.lsnr' on 'ol6-121-rac2' succeeded

CRS-2676: Start of 'ora.LISTENER.lsnr' on 'ol6-121-rac2' succeeded

CRS-2672: Attempting to start 'ora.asm' on 'ol6-121-rac2'

CRS-2676: Start of 'ora.asm' on 'ol6-121-rac2' succeeded

CRS-2672: Attempting to start 'ora.proxy_advm' on 'ol6-121-rac2'

CRS-2676: Start of 'ora.proxy_advm' on 'ol6-121-rac2' succeeded

CRS-6016: Resource auto-start has completed for server ol6-121-rac2

CRS-6024: Completed start of Oracle Cluster Ready Services-managed resources

CRS-4123: Oracle High Availability Services has been started.

[root@ol6-121-rac2 ~]#

--Check that the node role has been changed:

[root@ol6-121-rac2 ~]# crsctl get node role config

Node 'ol6-121-rac2' configured role is 'hub'

[root@ol6-121-rac2 ~]#

node2 is now a hub node, so we can install the RAC DB.

I will cover the DB installation in a separate post. Here, let me first show how to convert a hub node back into a leaf node.

It is just as simple, again two steps: first, run crsctl set node role leaf; second, restart CRS.

HUB -> LEAF:

[oracle@ol6-121-rac1 ~]$ crsctl get node role config

Node 'ol6-121-rac1' configured role is 'hub'

[oracle@ol6-121-rac2 ~]$ crsctl get node role config

Node 'ol6-121-rac2' configured role is 'hub'

--Convert node 2 into a leaf node:

[root@ol6-121-rac2 ~]# crsctl set node role leaf

CRS-4408: Node 'ol6-121-rac2' configured role successfully changed; restart Oracle High Availability Services for new role to take effect.

--Restart CRS, starting with stop crs:

[root@ol6-121-rac2 ~]# crsctl stop crs

CRS-2791: Starting shutdown of Oracle High Availability Services-managed resources on 'ol6-121-rac2'

CRS-2673: Attempting to stop 'ora.crsd' on 'ol6-121-rac2'

CRS-2790: Starting shutdown of Cluster Ready Services-managed resources on 'ol6-121-rac2'

CRS-2673: Attempting to stop 'ora.DATA.dg' on 'ol6-121-rac2'

CRS-2673: Attempting to stop 'ora.LISTENER.lsnr' on 'ol6-121-rac2'

CRS-2673: Attempting to stop 'ora.LISTENER_SCAN1.lsnr' on 'ol6-121-rac2'

CRS-2673: Attempting to stop 'ora.proxy_advm' on 'ol6-121-rac2'

CRS-2677: Stop of 'ora.LISTENER.lsnr' on 'ol6-121-rac2' succeeded

CRS-2673: Attempting to stop 'ora.ol6-121-rac2.vip' on 'ol6-121-rac2'

CRS-2677: Stop of 'ora.LISTENER_SCAN1.lsnr' on 'ol6-121-rac2' succeeded

CRS-2673: Attempting to stop 'ora.scan1.vip' on 'ol6-121-rac2'

CRS-2677: Stop of 'ora.ol6-121-rac2.vip' on 'ol6-121-rac2' succeeded

CRS-2672: Attempting to start 'ora.ol6-121-rac2.vip' on 'ol6-121-rac1'

CRS-2677: Stop of 'ora.scan1.vip' on 'ol6-121-rac2' succeeded

CRS-2672: Attempting to start 'ora.scan1.vip' on 'ol6-121-rac1'

CRS-2676: Start of 'ora.scan1.vip' on 'ol6-121-rac1' succeeded

CRS-2672: Attempting to start 'ora.LISTENER_SCAN1.lsnr' on 'ol6-121-rac1'

CRS-2676: Start of 'ora.ol6-121-rac2.vip' on 'ol6-121-rac1' succeeded

CRS-2677: Stop of 'ora.proxy_advm' on 'ol6-121-rac2' succeeded

CRS-2676: Start of 'ora.LISTENER_SCAN1.lsnr' on 'ol6-121-rac1' succeeded

CRS-2677: Stop of 'ora.DATA.dg' on 'ol6-121-rac2' succeeded

CRS-2673: Attempting to stop 'ora.asm' on 'ol6-121-rac2'

CRS-2677: Stop of 'ora.asm' on 'ol6-121-rac2' succeeded

CRS-2673: Attempting to stop 'ora.ASMNET1LSNR_ASM.lsnr' on 'ol6-121-rac2'

CRS-2677: Stop of 'ora.ASMNET1LSNR_ASM.lsnr' on 'ol6-121-rac2' succeeded

CRS-2673: Attempting to stop 'ora.ons' on 'ol6-121-rac2'

CRS-2677: Stop of 'ora.ons' on 'ol6-121-rac2' succeeded

CRS-2673: Attempting to stop 'ora.net1.network' on 'ol6-121-rac2'

CRS-2677: Stop of 'ora.net1.network' on 'ol6-121-rac2' succeeded

CRS-2792: Shutdown of Cluster Ready Services-managed resources on 'ol6-121-rac2' has completed

CRS-2677: Stop of 'ora.crsd' on 'ol6-121-rac2' succeeded

CRS-2673: Attempting to stop 'ora.ctssd' on 'ol6-121-rac2'

CRS-2673: Attempting to stop 'ora.evmd' on 'ol6-121-rac2'

CRS-2673: Attempting to stop 'ora.storage' on 'ol6-121-rac2'

CRS-2673: Attempting to stop 'ora.gpnpd' on 'ol6-121-rac2'

CRS-2673: Attempting to stop 'ora.mdnsd' on 'ol6-121-rac2'

CRS-2673: Attempting to stop 'ora.drivers.acfs' on 'ol6-121-rac2'

CRS-2677: Stop of 'ora.storage' on 'ol6-121-rac2' succeeded

CRS-2673: Attempting to stop 'ora.asm' on 'ol6-121-rac2'

CRS-2677: Stop of 'ora.drivers.acfs' on 'ol6-121-rac2' succeeded

CRS-2677: Stop of 'ora.gpnpd' on 'ol6-121-rac2' succeeded

CRS-2677: Stop of 'ora.mdnsd' on 'ol6-121-rac2' succeeded

CRS-2677: Stop of 'ora.evmd' on 'ol6-121-rac2' succeeded

CRS-2677: Stop of 'ora.ctssd' on 'ol6-121-rac2' succeeded

CRS-2677: Stop of 'ora.asm' on 'ol6-121-rac2' succeeded

CRS-2673: Attempting to stop 'ora.cluster_interconnect.haip' on 'ol6-121-rac2'

CRS-2677: Stop of 'ora.cluster_interconnect.haip' on 'ol6-121-rac2' succeeded

CRS-2673: Attempting to stop 'ora.cssd' on 'ol6-121-rac2'

CRS-2677: Stop of 'ora.cssd' on 'ol6-121-rac2' succeeded

CRS-2673: Attempting to stop 'ora.gipcd' on 'ol6-121-rac2'

CRS-2677: Stop of 'ora.gipcd' on 'ol6-121-rac2' succeeded

CRS-2793: Shutdown of Oracle High Availability Services-managed resources on 'ol6-121-rac2' has completed

CRS-4133: Oracle High Availability Services has been stopped.

--After the stop completes, start CRS again:

[root@ol6-121-rac2 ~]# crsctl start crs -wait

CRS-4123: Starting Oracle High Availability Services-managed resources

CRS-2672: Attempting to start 'ora.mdnsd' on 'ol6-121-rac2'

CRS-2672: Attempting to start 'ora.evmd' on 'ol6-121-rac2'

CRS-2676: Start of 'ora.mdnsd' on 'ol6-121-rac2' succeeded

CRS-2676: Start of 'ora.evmd' on 'ol6-121-rac2' succeeded

CRS-2672: Attempting to start 'ora.gpnpd' on 'ol6-121-rac2'

CRS-2676: Start of 'ora.gpnpd' on 'ol6-121-rac2' succeeded

CRS-2672: Attempting to start 'ora.gipcd' on 'ol6-121-rac2'

CRS-2676: Start of 'ora.gipcd' on 'ol6-121-rac2' succeeded

CRS-2672: Attempting to start 'ora.cssdmonitor' on 'ol6-121-rac2'

CRS-2676: Start of 'ora.cssdmonitor' on 'ol6-121-rac2' succeeded

CRS-2672: Attempting to start 'ora.cssd' on 'ol6-121-rac2'

CRS-2672: Attempting to start 'ora.diskmon' on 'ol6-121-rac2'

CRS-2676: Start of 'ora.diskmon' on 'ol6-121-rac2' succeeded

CRS-2789: Cannot stop resource 'ora.diskmon' as it is not running on server 'ol6-121-rac2'

CRS-2676: Start of 'ora.cssd' on 'ol6-121-rac2' succeeded

CRS-2672: Attempting to start 'ora.cluster_interconnect.haip' on 'ol6-121-rac2'

CRS-2672: Attempting to start 'ora.ctssd' on 'ol6-121-rac2'

CRS-2676: Start of 'ora.cluster_interconnect.haip' on 'ol6-121-rac2' succeeded

CRS-2676: Start of 'ora.ctssd' on 'ol6-121-rac2' succeeded

CRS-2672: Attempting to start 'ora.storage' on 'ol6-121-rac2'

CRS-2676: Start of 'ora.storage' on 'ol6-121-rac2' succeeded

CRS-2672: Attempting to start 'ora.crsd' on 'ol6-121-rac2'

CRS-2676: Start of 'ora.crsd' on 'ol6-121-rac2' succeeded

CRS-6017: Processing resource auto-start for servers: ol6-121-rac2

CRS-6016: Resource auto-start has completed for server ol6-121-rac2

CRS-6024: Completed start of Oracle Cluster Ready Services-managed resources

CRS-4123: Oracle High Availability Services has been started.

[root@ol6-121-rac2 ~]#

Appendix: output of crsctl stat res -p, showing all resources:

NAME=ora.ASMNET1LSNR_ASM.lsnr

TYPE=ora.asm_listener.type

ACL=owner:oracle:rwx,pgrp:oinstall:rwx,other::r--

ACTIONS=

ACTION_FAILURE_TEMPLATE=

ACTION_SCRIPT=%CRS_HOME%/bin/racgwrap%CRS_SCRIPT_SUFFIX%

ACTION_TIMEOUT=60

AGENT_FILENAME=%CRS_HOME%/bin/oraagent%CRS_EXE_SUFFIX%

ALERT_TEMPLATE=

ALIAS_NAME=

AUTO_START=restore

CHECK_INTERVAL=60

CHECK_TIMEOUT=120

CLEAN_TIMEOUT=60

DEFAULT_TEMPLATE=PROPERTY(RESOURCE_CLASS=listener) PROPERTY(LISTENER_NAME=PARSE(%NAME%, ., 2))

DEGREE=1

DELETE_TIMEOUT=60

DESCRIPTION=Oracle ASM Listener resource

ENABLED=1

ENDPOINTS=TCP:1521

INSTANCE_FAILOVER=1

INTERMEDIATE_TIMEOUT=0

LOAD=1

LOGGING_LEVEL=1

MODIFY_TIMEOUT=60

NLS_LANG=

NOT_RESTARTING_TEMPLATE=

OFFLINE_CHECK_INTERVAL=0

ORACLE_HOME=%CRS_HOME%

PORT=1521

PROFILE_CHANGE_TEMPLATE=

RESTART_ATTEMPTS=5

SCRIPT_TIMEOUT=60

SERVER_CATEGORY=ora.hub.category

START_CONCURRENCY=0

START_DEPENDENCIES=weak(global:ora.gns)

START_TIMEOUT=180

STATE_CHANGE_TEMPLATE=

STOP_CONCURRENCY=0

STOP_DEPENDENCIES=

STOP_TIMEOUT=0

SUBNET=192.168.57.0

TYPE_VERSION=1.1

UPTIME_THRESHOLD=1d

USER_WORKLOAD=no

USR_ORA_ENV=

USR_ORA_OPI=false

VERSION=12.1.0.1.0

NAME=ora.DATA.dg

TYPE=ora.diskgroup.type

ACL=owner:oracle:rwx,pgrp:oinstall:rwx,other::r--

ACTIONS=

ACTION_FAILURE_TEMPLATE=

ACTION_SCRIPT=

ACTION_TIMEOUT=60

AGENT_FILENAME=%CRS_HOME%/bin/oraagent%CRS_EXE_SUFFIX%

ALERT_TEMPLATE=

ALIAS_NAME=

AUTO_START=never

CHECK_INTERVAL=300

CHECK_TIMEOUT=30

CLEAN_TIMEOUT=60

DEFAULT_TEMPLATE=

DEGREE=1

DELETE_TIMEOUT=60

DESCRIPTION=CRS resource type definition for ASM disk group resource

ENABLED=1

INSTANCE_FAILOVER=1

INTERMEDIATE_TIMEOUT=0

LOAD=1

LOGGING_LEVEL=1

MODIFY_TIMEOUT=60

NLS_LANG=

NOT_RESTARTING_TEMPLATE=

OFFLINE_CHECK_INTERVAL=0

PROFILE_CHANGE_TEMPLATE=

RESTART_ATTEMPTS=5

SCRIPT_TIMEOUT=60

SERVER_CATEGORY=ora.hub.category

START_CONCURRENCY=0

START_DEPENDENCIES=pullup:always(ora.asm) hard(ora.asm)

START_TIMEOUT=900

STATE_CHANGE_TEMPLATE=

STOP_CONCURRENCY=0

STOP_DEPENDENCIES=hard(intermediate:ora.asm)

STOP_TIMEOUT=180

TYPE_VERSION=1.2

UPTIME_THRESHOLD=1d

USER_WORKLOAD=no

USR_ORA_ENV=

USR_ORA_OPI=false

USR_ORA_STOP_MODE=

VERSION=12.1.0.1.0

NAME=ora.LISTENER.lsnr

TYPE=ora.listener.type

ACL=owner:oracle:rwx,pgrp:oinstall:rwx,other::r--

ACTIONS=

ACTION_FAILURE_TEMPLATE=

ACTION_SCRIPT=%CRS_HOME%/bin/racgwrap%CRS_SCRIPT_SUFFIX%

ACTION_TIMEOUT=60

AGENT_FILENAME=%CRS_HOME%/bin/oraagent%CRS_EXE_SUFFIX%

ALERT_TEMPLATE=

ALIAS_NAME=ora.%CRS_CSS_NODENAME_LOWER_CASE%.LISTENER_%CRS_CSS_NODENAME_UPPER_CASE%.lsnr

AUTO_START=restore

CHECK_INTERVAL=60

CHECK_TIMEOUT=120

CLEAN_TIMEOUT=60

DEFAULT_TEMPLATE=PROPERTY(RESOURCE_CLASS=listener) PROPERTY(LISTENER_NAME=PARSE(%NAME%, ., 2))

DEGREE=1

DELETE_TIMEOUT=60

DESCRIPTION=Oracle Listener resource

ENABLED=1

ENDPOINTS=TCP:1521

INSTANCE_FAILOVER=1

INTERMEDIATE_TIMEOUT=0

LOAD=1

LOGGING_LEVEL=1

MODIFY_TIMEOUT=60

NLS_LANG=

NOT_RESTARTING_TEMPLATE=

OFFLINE_CHECK_INTERVAL=0

ORACLE_HOME=%CRS_HOME%

PORT=1521

PROFILE_CHANGE_TEMPLATE=

RESTART_ATTEMPTS=5

SCRIPT_TIMEOUT=60

SERVER_CATEGORY=ora.hub.category

START_CONCURRENCY=0

START_DEPENDENCIES=hard(type:ora.cluster_vip_net1.type) pullup(type:ora.cluster_vip_net1.type)

START_TIMEOUT=180

STATE_CHANGE_TEMPLATE=

STOP_CONCURRENCY=0

STOP_DEPENDENCIES=hard(intermediate:type:ora.cluster_vip_net1.type)

STOP_TIMEOUT=0

TYPE_VERSION=1.2

UPTIME_THRESHOLD=1d

USER_WORKLOAD=no

USR_ORA_ENV=

USR_ORA_OPI=false

VERSION=12.1.0.1.0

NAME=ora.LISTENER_LEAF.lsnr

TYPE=ora.leaf_listener.type

ACL=owner:oracle:rwx,pgrp:oinstall:rwx,other::r--

ACTIONS=

ACTION_FAILURE_TEMPLATE=

ACTION_SCRIPT=%CRS_HOME%/bin/racgwrap%CRS_SCRIPT_SUFFIX%

ACTION_TIMEOUT=60

AGENT_FILENAME=%CRS_HOME%/bin/oraagent%CRS_EXE_SUFFIX%

ALERT_TEMPLATE=

ALIAS_NAME=

AUTO_START=never

CHECK_INTERVAL=60

CHECK_TIMEOUT=120

CLEAN_TIMEOUT=60

DEFAULT_TEMPLATE=PROPERTY(RESOURCE_CLASS=listener) PROPERTY(LISTENER_NAME=PARSE(%NAME%, ., 2))

DEGREE=1

DELETE_TIMEOUT=60

DESCRIPTION=Oracle LEAF Listener resource

ENABLED=1

ENDPOINTS=TCP:1521

INSTANCE_FAILOVER=1

INTERMEDIATE_TIMEOUT=0

LOAD=1

LOGGING_LEVEL=1

MODIFY_TIMEOUT=60

NLS_LANG=

NOT_RESTARTING_TEMPLATE=

OFFLINE_CHECK_INTERVAL=0

ORACLE_HOME=%CRS_HOME%

PORT=1521

PROFILE_CHANGE_TEMPLATE=

RESTART_ATTEMPTS=5

SCRIPT_TIMEOUT=60

SERVER_CATEGORY=ora.leaf.category

START_CONCURRENCY=0

START_DEPENDENCIES=

START_TIMEOUT=180

STATE_CHANGE_TEMPLATE=

STOP_CONCURRENCY=0

STOP_DEPENDENCIES=

STOP_TIMEOUT=0

SUBNET=

TYPE_VERSION=1.1

UPTIME_THRESHOLD=1d

USER_WORKLOAD=no

USR_ORA_ENV=

USR_ORA_OPI=false

VERSION=12.1.0.1.0

NAME=ora.LISTENER_SCAN1.lsnr

TYPE=ora.scan_listener.type

ACL=owner:oracle:rwx,pgrp:oinstall:r-x,other::r--

ACTIONS=

ACTION_FAILURE_TEMPLATE=

ACTION_SCRIPT=

ACTION_TIMEOUT=60

ACTIVE_PLACEMENT=0

AGENT_FILENAME=%CRS_HOME%/bin/oraagent%CRS_EXE_SUFFIX%

ALERT_TEMPLATE=

ALIAS_NAME=

AUTO_START=restore

CARDINALITY=1

CHECK_INTERVAL=60

CHECK_TIMEOUT=120

CLEAN_TIMEOUT=60

DEFAULT_TEMPLATE=PROPERTY(RESOURCE_CLASS=scan_listener) PROPERTY(LISTENER_NAME=PARSE(%NAME%, ., 2))

DEGREE=1

DELETE_TIMEOUT=60

DESCRIPTION=Oracle SCAN listener resource

ENABLED=1

ENDPOINTS=TCP:1521

FAILOVER_DELAY=0

FAILURE_INTERVAL=0

FAILURE_THRESHOLD=0

HOSTING_MEMBERS=

INSTANCE_FAILOVER=1

INTERMEDIATE_TIMEOUT=0

LOAD=1

LOGGING_LEVEL=1

MODIFY_TIMEOUT=60

NETNUM=1

NLS_LANG=

NOT_RESTARTING_TEMPLATE=

OFFLINE_CHECK_INTERVAL=0

PLACEMENT=restricted

PORT=1521

PROFILE_CHANGE_TEMPLATE=

REGISTRATION_INVITED_NODES=

REGISTRATION_INVITED_SUBNETS=

RELOCATE_BY_DEPENDENCY=1

RESTART_ATTEMPTS=5

SCRIPT_TIMEOUT=60

SERVER_CATEGORY=ora.hub.category

SERVER_POOLS=*

START_CONCURRENCY=0

START_DEPENDENCIES=hard(ora.scan1.vip) dispersion:active(type:ora.scan_listener.type) pullup(ora.scan1.vip)

START_TIMEOUT=180

STATE_CHANGE_TEMPLATE=

STOP_CONCURRENCY=0

STOP_DEPENDENCIES=hard(intermediate:ora.scan1.vip)

STOP_TIMEOUT=0

TYPE_VERSION=2.2

UPTIME_THRESHOLD=1d

USER_WORKLOAD=no

USE_STICKINESS=0

USR_ORA_ENV=

USR_ORA_OPI=false

VERSION=12.1.0.1.0

NAME=ora.LISTENER_SCAN2.lsnr

TYPE=ora.scan_listener.type

ACL=owner:oracle:rwx,pgrp:oinstall:r-x,other::r--

ACTIONS=

ACTION_FAILURE_TEMPLATE=

ACTION_SCRIPT=

ACTION_TIMEOUT=60

ACTIVE_PLACEMENT=0

AGENT_FILENAME=%CRS_HOME%/bin/oraagent%CRS_EXE_SUFFIX%

ALERT_TEMPLATE=

ALIAS_NAME=

AUTO_START=restore

CARDINALITY=1

CHECK_INTERVAL=60

CHECK_TIMEOUT=120

CLEAN_TIMEOUT=60

DEFAULT_TEMPLATE=PROPERTY(RESOURCE_CLASS=scan_listener) PROPERTY(LISTENER_NAME=PARSE(%NAME%, ., 2))

DEGREE=1

DELETE_TIMEOUT=60

DESCRIPTION=Oracle SCAN listener resource

ENABLED=1

ENDPOINTS=TCP:1521

FAILOVER_DELAY=0

FAILURE_INTERVAL=0

FAILURE_THRESHOLD=0

HOSTING_MEMBERS=

INSTANCE_FAILOVER=1

INTERMEDIATE_TIMEOUT=0

LOAD=1

LOGGING_LEVEL=1

MODIFY_TIMEOUT=60

NETNUM=1

NLS_LANG=

NOT_RESTARTING_TEMPLATE=

OFFLINE_CHECK_INTERVAL=0

PLACEMENT=restricted

PORT=1521

PROFILE_CHANGE_TEMPLATE=

REGISTRATION_INVITED_NODES=

REGISTRATION_INVITED_SUBNETS=

RELOCATE_BY_DEPENDENCY=1

RESTART_ATTEMPTS=5

SCRIPT_TIMEOUT=60

SERVER_CATEGORY=ora.hub.category

SERVER_POOLS=*

START_CONCURRENCY=0

START_DEPENDENCIES=hard(ora.scan2.vip) dispersion:active(type:ora.scan_listener.type) pullup(ora.scan2.vip)

START_TIMEOUT=180

STATE_CHANGE_TEMPLATE=

STOP_CONCURRENCY=0

STOP_DEPENDENCIES=hard(intermediate:ora.scan2.vip)

STOP_TIMEOUT=0

TYPE_VERSION=2.2

UPTIME_THRESHOLD=1d

USER_WORKLOAD=no

USE_STICKINESS=0

USR_ORA_ENV=

USR_ORA_OPI=false

VERSION=12.1.0.1.0

NAME=ora.LISTENER_SCAN3.lsnr

TYPE=ora.scan_listener.type

ACL=owner:oracle:rwx,pgrp:oinstall:r-x,other::r--

ACTIONS=

ACTION_FAILURE_TEMPLATE=

ACTION_SCRIPT=

ACTION_TIMEOUT=60

ACTIVE_PLACEMENT=0

AGENT_FILENAME=%CRS_HOME%/bin/oraagent%CRS_EXE_SUFFIX%

ALERT_TEMPLATE=

ALIAS_NAME=

AUTO_START=restore

CARDINALITY=1

CHECK_INTERVAL=60

CHECK_TIMEOUT=120

CLEAN_TIMEOUT=60

DEFAULT_TEMPLATE=PROPERTY(RESOURCE_CLASS=scan_listener) PROPERTY(LISTENER_NAME=PARSE(%NAME%, ., 2))

DEGREE=1